[top]add_layer

In dlib, a deep neural network is composed of 3 main parts. An

input layer, a bunch of

computational layers,

and optionally a

loss layer. The add_layer

class is the central object which adds a computational layer onto an

input layer or an entire network. Therefore, deep neural networks are created

by stacking many layers on top of each other using the add_layer class.

For a tutorial showing how this is accomplished read

the DNN Introduction part 1 and

DNN Introduction part 2.

C++ Example Programs:

dnn_introduction_ex.cpp,

dnn_introduction2_ex.cpp,

dnn_inception_ex.cpp,

dnn_imagenet_ex.cpp,

dnn_imagenet_train_ex.cpp,

dnn_mmod_ex.cpp,

dnn_mmod_find_cars_ex.cpp,

dnn_mmod_find_cars2_ex.cpp,

dnn_mmod_train_find_cars_ex.cpp,

dnn_mmod_face_detection_ex.cpp,

dnn_mmod_dog_hipsterizer.cpp,

dnn_metric_learning_ex.cpp,

dnn_metric_learning_on_images_ex.cpp,

dnn_face_recognition_ex.cpp [top]add_loss_layer

This object is a tool for stacking a

loss layer

on the top of a deep neural network.

C++ Example Programs:

dnn_introduction_ex.cpp,

dnn_introduction2_ex.cpp,

dnn_inception_ex.cpp,

dnn_imagenet_ex.cpp,

dnn_imagenet_train_ex.cpp,

dnn_mmod_ex.cpp,

dnn_mmod_find_cars_ex.cpp,

dnn_mmod_train_find_cars_ex.cpp,

dnn_metric_learning_ex.cpp,

dnn_metric_learning_on_images_ex.cpp,

dnn_face_recognition_ex.cpp,

dnn_mmod_face_detection_ex.cpp,

dnn_mmod_dog_hipsterizer.cpp [top]add_skip_layer

This object adds a new layer to a deep neural network which draws its input

from a

tagged layer rather than from

the immediate predecessor layer as is normally done.

For a tutorial showing how to use tagging see the

dnn_introduction2_ex.cpp

example program.

[top]add_tag_layer

This object is a tool for tagging layers in a deep neural network. These tags make it

easy to refer to the tagged layer in other parts of your code.

Specifically, this object adds a new layer onto a deep neural network.

However, this layer simply performs the identity transform.

This means it is a no-op and its presence does not change the

behavior of the network. It exists solely to be used by

add_skip_layer or

layer() to reference a

particular part of a network.

For a tutorial showing how to use tagging see the

dnn_introduction2_ex.cpp

example program.

C++ Example Programs:

dnn_introduction2_ex.cpp [top]alias_tensor

This object is a

tensor that

aliases another tensor. That is, it doesn't have its own block of

memory but instead simply holds pointers to the memory of another

tensor object. It therefore allows you to efficiently break a tensor

into pieces and pass those pieces into functions.

[top]approximate_distance_function

This function attempts to find a

distance_function object which is close

to a target distance_function. That is, it searches for an X such that target(X) is

minimized. Critically, X may be set to use fewer basis vectors than the target.

The optimization begins with an initial guess supplied by the user

and searches for an X which locally minimizes target(X). Since

this problem can have many local minima the quality of the starting point

can significantly influence the results.

[top]assignment_function

This object is a tool for solving the optimal assignment problem given a

user defined method for computing the quality of any particular assignment.

C++ Example Programs:

assignment_learning_ex.cpp [top]average_precision

This function computes the average precision of a ranking.

[top]batch_cached

This is a convenience function for creating

batch_trainer objects that are setup

to use a kernel matrix cache.

[top]batch_trainer

This is a batch trainer object that is meant to wrap online trainer objects

that create

decision_functions. It

turns an online learning algorithm such as

svm_pegasos

into a batch learning object. This allows you to use objects like

svm_pegasos with functions (e.g.

cross_validate_trainer)

that expect batch mode training objects.

[top]bottom_up_cluster

This function runs a bottom up agglomerative clustering algorithm.

[top]cca

This function performs a canonical correlation analysis between two sets

of vectors. Additionally, it is designed to be very fast, even for large

datasets of over a million high dimensional vectors.

[top]chinese_whispers

This function performs the clustering algorithm described in the paper

Chinese Whispers - an Efficient Graph Clustering Algorithm and its

Application to Natural Language Processing Problems by Chris Biemann.

In particular, this is a method for automatically clustering the nodes in a

graph into groups. The method is able to automatically determine the number

of clusters.

C++ Example Programs:

dnn_face_recognition_ex.cppPython Example Programs:

face_clustering.py [top]compute_lda_transform

This function performs the dimensionality reducing version of linear

discriminant analysis. That is, you give it a set of labeled vectors and it

returns a linear transform that maps the input vectors into a new space that

is good for distinguishing between the different classes.

[top]compute_mean_squared_distance

This is a function that simply finds the average squared distance between all

pairs of a set of data samples. It is often convenient to use the reciprocal

of this value as the estimate of the gamma parameter of the

radial_basis_kernel.

[top]compute_roc_curve

This function computes a ROC curve (receiver operating characteristic curve).

[top]count_ranking_inversions

Given two sets of objects, X and Y, and an ordering relationship defined

between their elements, this function counts how many times we see an element

in the set Y ordered before an element in the set X. Additionally, this

routine executes efficiently in O(n*log(n)) time via the use of quick sort.

[top]cross_validate_ranking_trainer

Performs k-fold cross validation on a user supplied ranking trainer object such

as the

svm_rank_trainer

and returns the fraction of ranking pairs ordered correctly as well as the mean

average precision.

C++ Example Programs:

svm_rank_ex.cppPython Example Programs:

svm_rank.py [top]cross_validate_regression_trainer

Performs k-fold cross validation on a user supplied regression trainer object such

as the

svr_trainer and returns the mean squared error

and R-squared value.

C++ Example Programs:

svr_ex.cpp [top]cross_validate_trainer_threaded

Performs k-fold cross validation on a user supplied binary classification trainer object such

as the

svm_nu_trainer or

rbf_network_trainer.

This function does the same thing as

cross_validate_trainer

except this function also allows you to specify how many threads of execution to use.

So you can use this function to take advantage of a multi-core system to perform

cross validation faster.

[top]decision_function

This object represents a classification or regression function that was

learned by a kernel based learning algorithm. Therefore, it is a function

object that takes a sample object and returns a scalar value.

C++ Example Programs:

svm_ex.cpp [top]discriminant_pca

This object implements the Discriminant PCA technique described in the paper:

A New Discriminant Principal Component Analysis Method with Partial Supervision (2009)

by Dan Sun and Daoqiang Zhang

This algorithm is basically a straightforward generalization of the classical PCA

technique to handle partially labeled data. It is useful if you want to learn a linear

dimensionality reduction rule using a bunch of data that is partially labeled.

[top]distance_function

This object represents a point in kernel induced feature space.

You may use this object to find the distance from the point it

represents to points in input space as well as other points

represented by distance_functions.

[top]empirical_kernel_map

This object represents a map from objects of sample_type (the kind of object

a kernel function

operates on) to finite dimensional column vectors which

represent points in the kernel feature space defined by whatever kernel

is used with this object.

To use the empirical_kernel_map you supply it with a particular kernel and a set of

basis samples. After that you can present it with new samples and it will project

them into the part of kernel feature space spanned by your basis samples.

This means the empirical_kernel_map is a tool you can use to very easily kernelize

any algorithm that operates on column vectors. All you have to do is select a

set of basis samples and then use the empirical_kernel_map to project all your

data points into the part of kernel feature space spanned by those basis samples.

Then just run your normal algorithm on the output vectors and it will be effectively

kernelized.

Regarding methods to select a set of basis samples, if you are working with only a

few thousand samples then you can just use all of them as basis samples.

Alternatively, the

linearly_independent_subset_finder

often works well for selecting a basis set. I also find that picking a

random subset typically works well.

C++ Example Programs:

empirical_kernel_map_ex.cpp,

linear_manifold_regularizer_ex.cpp [top]equal_error_rate

This function finds a threshold that best separates the elements of two

vectors by selecting the threshold with equal error rate. It also reports

the value of the equal error rate.

[top]find_clusters_using_angular_kmeans

This is a simple linear kmeans clustering implementation.

To compare a sample to a cluster, it measures the angle between them

with respect to the origin. Therefore, it tries to find clusters

of points that all have small angles between each cluster member.

[top]find_clusters_using_kmeans

This is a simple linear kmeans clustering implementation.

It uses Euclidean distance to compare samples.

[top]find_gamma_with_big_centroid_gap

This is a function that tries to pick a reasonable default value for the

gamma parameter of the

radial_basis_kernel. It

picks the parameter that gives the largest separation between the centroids, in

kernel feature space, of two classes of data.

C++ Example Programs:

rank_features_ex.cpp [top]fix_nonzero_indexing

This is a simple function that takes a std::vector of

sparse vectors

and makes sure they are zero-indexed (e.g. makes sure the first index value is zero).

[top]graph_labeler

This object is a tool for labeling each node in a

graph

with a value of true or false, subject to a labeling consistency constraint between

nodes that share an edge. In particular, this object is useful for

representing a graph labeling model learned via some machine learning

method, such as the

structural_graph_labeling_trainer.

C++ Example Programs:

graph_labeling_ex.cpp [top]histogram_intersection_kernel

This object represents a histogram intersection kernel for use with

kernel learning machines.

[top]input_rgb_image

This is a simple input layer type for use in a deep neural network

which takes an RGB image as input and loads it into a network. It

is very similar to the

input layer except that

it allows you to subtract the average color value from each color

channel when converting an image to a tensor.

[top]input_rgb_image_sized

This layer has an interface and behavior identical to

input_rgb_image

except that it requires input images to have a particular size.

[top]is_assignment_problem

This function takes a set of training data for an assignment problem

and reports back if it could possibly be a well formed assignment problem.

[top]is_binary_classification_problem

This function simply takes two vectors, the first containing feature vectors and

the second containing labels, and reports back if the two could possibly

contain data for a well formed classification problem.

[top]is_forced_assignment_problem

This function takes a set of training data for a forced assignment problem

and reports back if it could possibly be a well formed forced assignment problem.

[top]is_graph_labeling_problem

This function takes a set of training data for a graph labeling problem

and reports back if it could possibly be a well formed problem.

[top]is_learning_problem

This function simply takes two vectors, the first containing feature vectors and

the second containing labels, and reports back if the two could possibly

contain data for a well formed learning problem. In this case it just means

that the two vectors have the same length and aren't empty.

[top]is_ranking_problem

This function takes a set of training data for a learning-to-rank problem

and reports back if it could possibly be a well formed problem.

[top]is_sequence_labeling_problem

This function takes a set of training data for a sequence labeling problem

and reports back if it could possibly be a well formed sequence labeling problem.

[top]is_sequence_segmentation_problem

This function takes a set of training data for a sequence segmentation problem

and reports back if it could possibly be a well formed sequence segmentation problem.

[top]is_track_association_problem

This function takes a set of training data for a track association learning problem

and reports back if it could possibly be a well formed track association problem.

[top]kcentroid

This object represents a weighted sum of sample points in a kernel induced

feature space. It can be used to kernelize any algorithm that requires only

the ability to perform vector addition, subtraction, scalar multiplication,

and inner products.

An example use of this object is as an online algorithm for recursively estimating

the centroid of a sequence of training points. This object then allows you to

compute the distance between the centroid and any test points. So you can use

this object to predict how similar a test point is to the data this object has

been trained on (larger distances from the centroid indicate dissimilarity/anomalous

points).

The object internally keeps a set of "dictionary vectors"

that are used to represent the centroid. It manages these vectors using the

sparsification technique described in the paper The Kernel Recursive Least

Squares Algorithm by Yaakov Engel. This technique allows us to keep the

number of dictionary vectors down to a minimum. In fact, the object has a

user selectable tolerance parameter that controls the trade off between

accuracy and number of stored dictionary vectors.

C++ Example Programs:

kcentroid_ex.cpp [top]kernel_matrix

This is a simple set of functions that makes it easy to turn a kernel

object and a set of samples into a kernel matrix. It takes these two

things and returns a

matrix expression

that represents the kernel matrix.

[top]kkmeans

This is an implementation of a kernelized k-means clustering algorithm.

It performs k-means clustering by using the

kcentroid object.

If you want to use the linear kernel (i.e. do a normal k-means clustering) then you

should use the find_clusters_using_kmeans routine.

C++ Example Programs:

kkmeans_ex.cpp [top]krls

This is an implementation of the kernel recursive least squares algorithm

described in the paper The Kernel Recursive Least Squares Algorithm by Yaakov Engel.

The long and short of this algorithm is that it is an online kernel based

regression algorithm. You give it samples (x,y) and it learns the function

f(x) == y. For a detailed description of the algorithm read the above paper.

Note that if you want to use the linear kernel then you would

be better off using the rls object as it

is optimized for this case.

C++ Example Programs:

krls_ex.cpp,

krls_filter_ex.cpp [top]learn_platt_scaling

This function is an implementation of the algorithm described in the following

papers:

Probabilistic Outputs for Support Vector Machines and Comparisons to

Regularized Likelihood Methods by John C. Platt. March 26, 1999

A Note on Platt's Probabilistic Outputs for Support Vector Machines

by Hsuan-Tien Lin, Chih-Jen Lin, and Ruby C. Weng

This function is the tool used to implement the

train_probabilistic_decision_function routine.

[top]linearly_independent_subset_finder

This is an implementation of an online algorithm for recursively finding a

set (aka dictionary) of linearly independent vectors in a kernel induced

feature space. To use it you decide how large you would like the dictionary

to be and then you feed it sample points.

The implementation uses the Approximately Linearly Dependent metric described

in the paper The Kernel Recursive Least Squares Algorithm by Yaakov Engel to

decide which points are more linearly independent than others. The metric is

simply the squared distance between a test point and the subspace spanned by

the set of dictionary vectors.

Each time you present this object with a new sample point

it calculates the projection distance and if it is sufficiently large then this

new point is included into the dictionary. Note that this object can be configured

to have a maximum size. Once the max dictionary size is reached each new point

kicks out a previous point. This is done by removing the dictionary vector that

has the smallest projection distance onto the others. That is, the "least linearly

independent" vector is removed to make room for the new one.

C++ Example Programs:

empirical_kernel_map_ex.cpp [top]linear_kernel

This object represents a linear function kernel for use with

kernel learning machines.

[top]linear_manifold_regularizer

Many learning algorithms attempt to minimize a function that, at a high

level, looks like this:

f(w) == complexity + training_set_error

The idea is to find the set of parameters, w, that gives low error on

your training data but also is not "complex" according to some particular

measure of complexity. This strategy of penalizing complexity is

usually called regularization.

In the above setting, all the training data consists of labeled samples.

However, it would be nice to be able to benefit from unlabeled data.

The idea of manifold regularization is to extract useful information from

unlabeled data by first defining which data samples are "close" to each other

(perhaps by using their 3 nearest neighbors)

and then adding a term to

the above function that penalizes any decision rule which produces

different outputs on data samples which we have designated as being close.

It turns out that it is possible to transform these manifold regularized learning

problems into the normal form shown above by applying a certain kind of

preprocessing to all our data samples. Once this is done we can use a

normal learning algorithm, such as the svm_c_linear_trainer,

on just the

labeled data samples and obtain the same output as the manifold regularized

learner would have produced.

The linear_manifold_regularizer is a tool for creating this preprocessing

transformation. In particular, the transformation is linear. That is, it

is just a matrix you multiply with all your samples. For a more detailed

discussion of this topic you should consult the following paper. In

particular, see section 4.2. This object computes the inverse T matrix

described in that section.

Linear Manifold Regularization for Large Scale Semi-supervised Learning

by Vikas Sindhwani, Partha Niyogi, and Mikhail Belkin

C++ Example Programs:

linear_manifold_regularizer_ex.cpp [top]load_image_dataset_metadata

dlib comes with a graphical tool for annotating images with

labeled rectangles. The tool produces an XML file containing these

annotations. Therefore, load_image_dataset_metadata() is a routine

for parsing these XML files. Note also that this is the metadata

format used by the image labeling tool included with dlib in the

tools/imglab folder.

[top]load_libsvm_formatted_data

This is a function that loads the data from a file that uses

the LIBSVM format. It loads the data into a std::vector of

sparse vectors.

If you want to load data into dense vectors (i.e.

dlib::matrix objects) then you can use the

sparse_to_dense

function to perform the conversion. Also, some LIBSVM formatted files number

their features beginning with 1 rather than 0. If this bothers you, then you

can fix it by using the

fix_nonzero_indexing function

on the data after it is loaded.

[top]loss_mean_squared_

This object is a

loss layer

for a deep neural network. In particular, it implements the mean squared loss, which is

appropriate for regression problems.

[top]loss_mean_squared_multioutput_

This object is a

loss layer

for a deep neural network. In particular, it implements the mean squared loss, which is

appropriate for regression problems. It is identical to the

loss_mean_squared_

loss except this version supports multiple output values.

[top]lspi

This object is an implementation of the reinforcement learning algorithm

described in the following paper:

Lagoudakis, Michail G., and Ronald Parr. "Least-squares policy

iteration." The Journal of Machine Learning Research 4 (2003):

1107-1149.

[top]mlp

This object represents a multilayer layer perceptron network that is

trained using the back propagation algorithm. The training algorithm also

incorporates the momentum method. That is, each round of back propagation

training also adds a fraction of the previous update. This fraction

is controlled by the momentum term set in the constructor.

It is worth noting that a MLP is, in general, very inferior to modern

kernel algorithms such as the support vector machine. So if you haven't

tried any other techniques with your data you really should.

C++ Example Programs:

mlp_ex.cppImplementations:mlp_kernel_1:

This is implemented in the obvious way.

kernel_1a | is a typedef for mlp_kernel_1 |

kernel_1a_c |

is a typedef for kernel_1a that checks its preconditions.

|

[top]modularity

This function computes the modularity of a particular graph clustering. This

is a number that tells you how good the clustering is. In particular, it

is the measure optimized by the

newman_cluster

routine.

[top]multiclass_linear_decision_function

This object represents a multiclass classifier built out of a set of

binary classifiers. Each binary classifier is used to vote for the

correct multiclass label using a one vs. all strategy. Therefore,

if you have N classes then there will be N binary classifiers inside

this object. Additionally, this object is linear in the sense that

each of these binary classifiers is a simple linear plane.

[top]nearest_center

This function takes a list of cluster centers and a query vector

and identifies which cluster center is nearest to the query vector.

[top]newman_cluster

This function performs the clustering algorithm described in the paper

Modularity and community structure in networks by M. E. J. Newman.

In particular, this is a method for automatically clustering the nodes in a

graph into groups. The method is able to automatically determine the number

of clusters and does not have any parameters. In general, it is a very good

clustering technique.

[top]normalized_function

This object represents a container for another function

object and an instance of the

vector_normalizer object.

It automatically normalizes all inputs before passing them

off to the contained function object.

C++ Example Programs:

svm_ex.cpp [top]null_trainer_type

This object is a simple tool for turning a

decision_function

(or any object with an interface compatible with decision_function)

into a trainer object that always returns the original decision

function when you try to train with it.

dlib contains a few "training post processing" algorithms (e.g.

reduced and reduced2). These tools

take in a trainer object,

tell it to perform training, and then they take the output decision

function and do some kind of post processing to it. The null_trainer_type

object is useful because you can use it to run an already

learned decision function through the training post processing

algorithms by turning a decision function into a null_trainer_type

and then giving it to a post processor.

[top]offset_kernel

This object represents a kernel with a fixed value offset

added to it.

[top]one_vs_all_decision_function

This object represents a multiclass classifier built out

of a set of binary classifiers. Each binary classifier

is used to vote for the correct multiclass label using a

one vs. all strategy. Therefore, if you have N classes then

there will be N binary classifiers inside this object.

[top]one_vs_all_trainer

This object is a tool for turning a bunch of binary classifiers

into a multiclass classifier. It does this by training the binary

classifiers in a one vs. all fashion. That is, if you have N possible

classes then it trains N binary classifiers which are then used

to vote on the identity of a test sample.

[top]one_vs_one_decision_function

This object represents a multiclass classifier built out

of a set of binary classifiers. Each binary classifier

is used to vote for the correct multiclass label using a

one vs. one strategy. Therefore, if you have N classes then

there will be N*(N-1)/2 binary classifiers inside this object.

C++ Example Programs:

multiclass_classification_ex.cpp,

custom_trainer_ex.cpp [top]one_vs_one_trainer

This object is a tool for turning a bunch of binary classifiers

into a multiclass classifier. It does this by training the binary

classifiers in a one vs. one fashion. That is, if you have N possible

classes then it trains N*(N-1)/2 binary classifiers which are then used

to vote on the identity of a test sample.

C++ Example Programs:

multiclass_classification_ex.cpp,

custom_trainer_ex.cpp [top]pick_initial_centers

This is a function that you can use to seed data clustering algorithms

like the

kkmeans clustering method. What it

does is pick reasonable starting points for clustering by basically

trying to find a set of points that are all far away from each other.

C++ Example Programs:

kkmeans_ex.cpp [top]policy

This is a policy (i.e. a control law) based on a linear function approximator.

You can use a tool like

lspi to learn the parameters

of a policy.

[top]polynomial_kernel

This object represents a polynomial kernel for use with

kernel learning machines.

[top]probabilistic_decision_function

This object represents a binary decision function for use with

kernel-based learning-machines. It returns an

estimate of the probability that a given sample is in the +1 class.

C++ Example Programs:

svm_ex.cpp [top]probabilistic_function

This object represents a binary decision function for use with

any kind of binary classifier. It returns an

estimate of the probability that a given sample is in the +1 class.

[top]process_sample

This object holds a training sample for a reinforcement learning algorithm

(e.g.

lspi).

In particular, it contains a state, action, reward, next state sample from

some process.

[top]projection_function

This object represents a function that takes a data sample and projects

it into kernel feature space. The result is a real valued column vector that

represents a point in a kernel feature space. Instances of

this object are created using the

empirical_kernel_map.

C++ Example Programs:

linear_manifold_regularizer_ex.cpp [top]radial_basis_kernel

This object represents a radial basis function kernel for use with

kernel learning machines.

C++ Example Programs:

svm_ex.cpp [top]randomize_samples

Randomizes the order of samples in a column vector containing sample data.

C++ Example Programs:

svm_ex.cpp [top]ranking_pair

This object is used to contain a ranking example. Therefore, ranking_pair

objects are used to represent training examples for learning-to-rank tasks,

such as those used by the

svm_rank_trainer.

C++ Example Programs:

svm_rank_ex.cppPython Example Programs:

svm_rank.py [top]rank_features

Finds a ranking of the top N (a user supplied parameter) features in a set of data

from a two class classification problem. It

does this by computing the distance between the centroids of both classes in kernel defined

feature space. Good features are then ones that result in the biggest separation between

the two centroids.

C++ Example Programs:

rank_features_ex.cpp [top]rank_unlabeled_training_samples

This routine implements an active learning method for selecting the most

informative data sample to label out of a set of unlabeled samples.

In particular, it implements the MaxMin Margin and Ratio Margin methods

described in the paper:

Support Vector Machine Active Learning with Applications to Text Classification

by Simon Tong and Daphne Koller.

[top]rbf_network_trainer

Trains a radial basis function network and outputs a

decision_function.

This object can be used for either regression or binary classification problems.

It's worth pointing out that this object is essentially an unregularized version

of

kernel ridge regression. This means

you should really prefer to use kernel ridge regression instead.

[top]reduced_decision_function_trainer

This is a batch trainer object that is meant to wrap other batch trainer objects

that create

decision_function objects.

It performs post processing on the output decision_function objects

with the intent of representing the decision_function with fewer

basis vectors.

[top]reduced_decision_function_trainer2

This is a batch trainer object that is meant to wrap other batch trainer objects

that create decision_function objects.

It performs post processing on the output decision_function objects

with the intent of representing the decision_function with fewer

basis vectors.

It begins by performing the same post processing as

the reduced_decision_function_trainer

object but it also performs a global gradient based optimization

to further improve the results. The gradient based optimization is

implemented using the approximate_distance_function routine.

C++ Example Programs:

svm_ex.cpp [top]repeat

This object adds N copies of a computational layer onto a deep neural network.

It is essentially the same as using

add_layer N times,

except that it involves less typing, and for large N, will compile much faster.

C++ Example Programs:

dnn_introduction2_ex.cpp [top]resizable_tensor

This object represents a 4D array of float values, all stored contiguously

in memory. Importantly, it keeps two copies of the floats, one on the host

CPU side and another on the GPU device side. It automatically performs the

necessary host/device transfers to keep these two copies of the data in

sync.

All transfers to the device happen asynchronously with respect to the

default CUDA stream so that CUDA kernel computations can overlap with data

transfers. However, any transfers from the device to the host happen

synchronously in the default CUDA stream. Therefore, you should perform

all your CUDA kernel launches on the default stream so that transfers back

to the host do not happen before the relevant computations have completed.

If DLIB_USE_CUDA is not #defined then this object will not use CUDA at all.

Instead, it will simply store one host side memory block of floats.

Finally, the convention in dlib code is to interpret the tensor as a set of

num_samples() 3D arrays, each of dimension k() by nr() by nc(). Also,

while this class does not specify a memory layout, the convention is to

assume that indexing into an element at coordinates (sample,k,nr,nc) can be

accomplished via:

host()[((sample*t.k() + k)*t.nr() + nr)*t.nc() + nc]

[top]rls

This is an implementation of the linear version of the recursive least

squares algorithm. It accepts training points incrementally and, at

each step, maintains the solution to the following optimization problem:

find w minimizing: 0.5*dot(w,w) + C*sum_i(y_i - trans(x_i)*w)^2

Where (x_i,y_i) are training pairs. x_i is some vector and y_i is a target

scalar value.

[top]roc_c1_trainer

This is a convenience function for creating

roc_trainer_type objects that are

setup to pick a point on the ROC curve with respect to the +1 class.

[top]roc_c2_trainer

This is a convenience function for creating

roc_trainer_type objects that are

setup to pick a point on the ROC curve with respect to the -1 class.

[top]roc_trainer_type

This object is a simple trainer post processor that allows you to

easily adjust the bias term in a trained decision_function object.

That is, this object lets you pick a point on the ROC curve and

it will adjust the bias term appropriately.

So for example, suppose you wanted to set the bias term so that

the accuracy of your decision function on +1 labeled samples was 99%.

To do this you would use an instance of this object declared as follows:

roc_trainer_type<trainer_type>(your_trainer, 0.99, +1);

[top]rr_trainer

Performs linear ridge regression and outputs a decision_function that

represents the learned function. In particular, this object can only be used with

the linear_kernel. It is optimized for the linear case where

the number of features in each sample vector is small (i.e. on the order of 1000 or less since the

algorithm is cubic in the number of features.).

If you want to use a nonlinear kernel then you should use the krr_trainer.

This object is capable of automatically estimating its regularization parameter using

leave-one-out cross-validation.

[top]rvm_regression_trainer

Trains a relevance vector machine for solving regression problems.

Outputs a decision_function that represents the learned

regression function.

The implementation of the RVM training algorithm used by this library is based

on the following paper:

Tipping, M. E. and A. C. Faul (2003). Fast marginal likelihood maximisation

for sparse Bayesian models. In C. M. Bishop and B. J. Frey (Eds.), Proceedings

of the Ninth International Workshop on Artificial Intelligence and Statistics,

Key West, FL, Jan 3-6.

C++ Example Programs:

rvm_regression_ex.cpp [top]rvm_trainer

Trains a relevance vector machine for solving binary classification problems.

Outputs a decision_function that represents the learned classifier.

The implementation of the RVM training algorithm used by this library is based

on the following paper:

Tipping, M. E. and A. C. Faul (2003). Fast marginal likelihood maximisation

for sparse Bayesian models. In C. M. Bishop and B. J. Frey (Eds.), Proceedings

of the Ninth International Workshop on Artificial Intelligence and Statistics,

Key West, FL, Jan 3-6.

C++ Example Programs:

rvm_ex.cpp [top]sammon_projection

This is a function object that computes the Sammon projection of a set

of N points in a L-dimensional vector space onto a d-dimensional space

(d < L), according to the paper:

A Nonlinear Mapping for Data Structure Analysis (1969) by J.W. Sammon

[top]save_image_dataset_metadata

This routine is a tool for saving labeled image metadata to an

XML file. In particular, this routine saves the metadata into a

form which can be read by the

load_image_dataset_metadata

routine. Note also that this is the metadata

format used by the image labeling tool included with dlib in the

tools/imglab folder.

[top]save_libsvm_formatted_data

This is actually a pair of overloaded functions. Between the two of them

they let you save

sparse

or dense data vectors to file using the LIBSVM format.

[top]segment_number_line

This routine clusters real valued scalars in essentially linear time.

It uses a combination of bottom up clustering and a simple greedy scan

to try and find the most compact set of ranges that contain all

given scalar values.

[top]select_all_distinct_labels

This is a function which determines all distinct values present in a

std::vector and returns the result.

[top]sequence_labeler

This object is a tool for doing sequence labeling. In particular,

it is capable of representing sequence labeling models such as

those produced by Hidden Markov SVMs or Conditional Random fields.

See the following papers for an introduction to these techniques:

Hidden Markov Support Vector Machines by

Y. Altun, I. Tsochantaridis, T. Hofmann

Shallow Parsing with Conditional Random Fields by

Fei Sha and Fernando Pereira

C++ Example Programs:

sequence_labeler_ex.cpp [top]sequence_segmenter

This object is a tool for segmenting a sequence of objects into a set of

non-overlapping chunks. An example sequence segmentation task is to take

English sentences and identify all the named entities. In this example,

you would be using a sequence_segmenter to find all the chunks of

contiguous words which refer to proper names.

Internally, the sequence_segmenter uses the BIO (Begin, Inside, Outside) or

BILOU (Begin, Inside, Last, Outside, Unit) sequence tagging model.

Moreover, it is implemented using a sequence_labeler

object and therefore sequence_segmenter objects are examples of

chain structured conditional random field style sequence

taggers.

C++ Example Programs:

sequence_segmenter_ex.cppPython Example Programs:

sequence_segmenter.py,

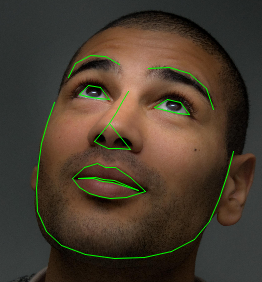

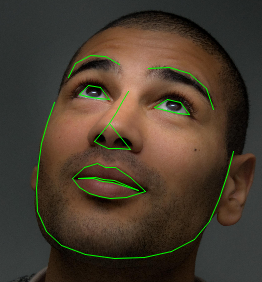

[top]shape_predictor_trainer

This object is a tool for training

shape_predictors

based on annotated training images. Its implementation uses the algorithm described in:

One Millisecond Face Alignment with an Ensemble of Regression Trees

by Vahid Kazemi and Josephine Sullivan, CVPR 2014

It is capable of learning high quality shape models. For example, this is an example output

for one of the faces in the HELEN face dataset:

C++ Example Programs:

train_shape_predictor_ex.cppPython Example Programs:

train_shape_predictor.py [top]sigmoid_kernel

This object represents a sigmoid kernel for use with

kernel learning machines.

[top]simplify_linear_decision_function

This is a set of functions that takes various forms of linear

decision functions

and collapses them down so that they only compute a single dot product when invoked.

[top]sort_basis_vectors

A kernel based learning method ultimately needs to select a set of basis functions

represented by a particular choice of kernel and a set of basis vectors.

sort_basis_vectors() is a function which attempts to perform supervised

basis set selection. In particular, you give it a candidate set of basis

vectors and it sorts them according to how useful they are for solving

a particular decision problem.

[top]sparse_histogram_intersection_kernel

This object represents a histogram intersection kernel kernel for use with

kernel learning machines that operate on

sparse vectors.

[top]sparse_linear_kernel

This object represents a linear kernel for use with

kernel learning machines that operate on

sparse vectors.

[top]sparse_polynomial_kernel

This object represents a polynomial kernel for use with

kernel learning machines that operate on

sparse vectors.

[top]sparse_radial_basis_kernel

This object represents a radial basis function kernel for use with

kernel learning machines that operate on

sparse vectors.

[top]sparse_sigmoid_kernel

This object represents a sigmoid kernel for use with

kernel learning machines that operate on

sparse vectors.

[top]spectral_cluster

This function performs the clustering algorithm described in the paper

On spectral clustering: Analysis and an algorithm by Ng, Jordan, and Weiss.

C++ Example Programs:

kkmeans_ex.cpp [top]structural_assignment_trainer

This object is a tool for learning to solve an assignment problem based

on a training dataset of example assignments. The training procedure produces an

assignment_function object which can be used

to predict the assignments of new data.

Note that this is just a convenience wrapper around the

structural_svm_assignment_problem

to make it look similar to all the other trainers in dlib.

C++ Example Programs:

assignment_learning_ex.cpp [top]structural_graph_labeling_trainer

This object is a tool for learning to solve a graph labeling problem based

on a training dataset of example labeled

graphs.

The training procedure produces a

graph_labeler object

which can be used to predict the labelings of new graphs.

To elaborate, a graph labeling problem is a task to learn a binary classifier which

predicts the label of each node in a graph. Additionally, we have information in

the form of edges between nodes where edges are present when we believe the

linked nodes are likely to have the same label. Therefore, part of a graph labeling

problem is to learn to score each edge in terms of how strongly the edge should enforce

labeling consistency between its two nodes.

Note that this is just a convenience wrapper around the

structural_svm_graph_labeling_problem

to make it look similar to all the other trainers in dlib. You might also

consider reading the book

Structured

Prediction and Learning in Computer Vision by Sebastian

Nowozin and Christoph H. Lampert since it contains a good introduction to machine learning

methods such as the algorithm implemented by the structural_graph_labeling_trainer.

C++ Example Programs:

graph_labeling_ex.cpp [top]structural_sequence_labeling_trainer

This object is a tool for learning to do sequence labeling based

on a set of training data. The training procedure produces a

sequence_labeler object which can

be use to predict the labels of new data sequences.

Note that this is just a convenience wrapper around the

structural_svm_sequence_labeling_problem

to make it look similar to all the other trainers in dlib.

C++ Example Programs:

sequence_labeler_ex.cpp [top]structural_svm_graph_labeling_problem

This object is a tool for learning the weight vectors needed to use

a

graph_labeler object.

It learns the parameter vectors by

formulating the problem as a

structural SVM problem.

[top]structural_svm_problem

This object, when used with the

oca optimizer, is a tool

for solving the optimization problem associated

with a structural support vector machine. A structural SVM is a supervised

machine learning method for learning to predict complex outputs. This is

contrasted with a binary classifier which makes only simple yes/no

predictions. A structural SVM, on the other hand, can learn to predict

complex outputs such as entire parse trees or DNA sequence alignments. To

do this, it learns a function F(x,y) which measures how well a particular

data sample x matches a label y. When used for prediction, the best label

for a new x is given by the y which maximizes F(x,y).

For an introduction to structured support vector machines you should consult

the following paper:

Predicting Structured Objects with Support Vector Machines by

Thorsten Joachims, Thomas Hofmann, Yisong Yue, and Chun-nam Yu

For a more detailed discussion of the particular algorithm implemented by this

object see the following paper:

T. Joachims, T. Finley, Chun-Nam Yu, Cutting-Plane Training of Structural SVMs,

Machine Learning, 77(1):27-59, 2009.

Note that this object is essentially a tool for solving the 1-Slack structural

SVM with margin-rescaling. Specifically, see Algorithm 3 in the above referenced

paper.

Finally, for a very detailed introduction to this subject, you should consider the book:

Structured

Prediction and Learning in Computer Vision by Sebastian Nowozin and

Christoph H. Lampert

C++ Example Programs:

svm_struct_ex.cppPython Example Programs:

svm_struct.py,

[top]structural_svm_problem_threaded

This is just a version of the

structural_svm_problem

which is capable of using multiple cores/threads at a time. You should use it if

you have a multi-core CPU and the separation oracle takes a long time to compute. Or even better, if you

have multiple computers then you can use the

svm_struct_controller_node

to distribute the work across many computers.

C++ Example Programs:

svm_struct_ex.cpp [top]structural_svm_sequence_labeling_problem

This object is a tool for learning the weight vector needed to use

a

sequence_labeler object.

It learns the parameter vector by formulating the problem as a

structural SVM problem.

The general approach is discussed in the paper:

Hidden Markov Support Vector Machines by

Y. Altun, I. Tsochantaridis, T. Hofmann

While the particular optimization strategy used is the method from:

T. Joachims, T. Finley, Chun-Nam Yu, Cutting-Plane Training of

Structural SVMs, Machine Learning, 77(1):27-59, 2009.

[top]structural_track_association_trainer

This object is a tool for learning to solve a track association problem. That

is, it takes in a set of training data and outputs a

track_association_function

you can use to do detection to track association.

C++ Example Programs:

learning_to_track_ex.cpp [top]svm_c_ekm_trainer

This object represents a tool for training the C formulation of

a support vector machine for solving binary classification problems.

It is implemented using the

empirical_kernel_map

to kernelize the

svm_c_linear_trainer. This makes it a very fast algorithm

capable of learning from very large datasets.

[top]svm_c_linear_dcd_trainer

This object represents a tool for training the C formulation of

a support vector machine to solve binary classification problems.

It is optimized for the case where linear kernels are used and

is implemented using the method described in the

following paper:

A Dual Coordinate Descent Method for Large-scale Linear SVM

by Cho-Jui Hsieh, Kai-Wei Chang, and Chih-Jen Lin

This trainer has the ability to disable the bias term and also

to force the last element of the learned weight vector to be 1.

Additionally, it can be warm-started from the solution to a previous

training run.

C++ Example Programs:

one_class_classifiers_ex.cpp [top]svm_c_linear_trainer

This object represents a tool for training the C formulation of

a support vector machine to solve binary classification problems.

It is optimized for the case where linear kernels are used and

is implemented using the

oca

optimizer and uses the exact line search described in the

following paper:

Optimized Cutting Plane Algorithm for Large-Scale Risk Minimization

by Vojtech Franc, Soren Sonnenburg; Journal of Machine Learning

Research, 10(Oct):2157--2192, 2009.

This trainer has the ability to restrict the learned weights to non-negative

values.

C++ Example Programs:

svm_sparse_ex.cpp [top]svm_c_trainer

Trains a C support vector machine for solving binary classification problems

and outputs a decision_function.

It is implemented using the SMO algorithm.

The implementation of the C-SVM training algorithm used by this library is based

on the following paper:

C++ Example Programs:

svm_c_ex.cpp [top]svm_multiclass_linear_trainer

This object represents a tool for training a multiclass support

vector machine. It is optimized for the case where linear kernels

are used and implemented using the

structural_svm_problem

object.

[top]svm_nu_trainer

Trains a nu support vector machine for solving binary classification problems and

outputs a decision_function.

It is implemented using the SMO algorithm.

The implementation of the nu-svm training algorithm used by this library is based

on the following excellent papers:

- Chang and Lin, Training {nu}-Support Vector Classifiers: Theory and Algorithms

- Chih-Chung Chang and Chih-Jen Lin, LIBSVM : a library for support vector

machines, 2001. Software available at

http://www.csie.ntu.edu.tw/~cjlin/libsvm

C++ Example Programs:

svm_ex.cpp,

model_selection_ex.cpp [top]svm_one_class_trainer

Trains a one-class support vector classifier and outputs a decision_function.

It is implemented using the SMO algorithm.

The implementation of the one-class training algorithm used by this library is based

on the following paper:

C++ Example Programs:

one_class_classifiers_ex.cpp [top]svm_pegasos

This object implements an online algorithm for training a support

vector machine for solving binary classification problems.

The implementation of the Pegasos algorithm used by this object is based

on the following excellent paper:

Pegasos: Primal estimated sub-gradient solver for SVM (2007)

by Shai Shalev-Shwartz, Yoram Singer, Nathan Srebro

In ICML

This SVM training algorithm has two interesting properties. First, the

pegasos algorithm itself converges to the solution in an amount of time

unrelated to the size of the training set (in addition to being quite fast

to begin with). This makes it an appropriate algorithm for learning from

very large datasets. Second, this object uses the kcentroid object

to maintain a sparse approximation of the learned decision function.

This means that the number of support vectors in the resulting decision

function is also unrelated to the size of the dataset (in normal SVM

training algorithms, the number of support vectors grows approximately

linearly with the size of the training set).

However, if you are considering using svm_pegasos, you should also try the

svm_c_linear_trainer for linear

kernels or svm_c_ekm_trainer for non-linear

kernels since these other trainers are, usually, faster and easier to use

than svm_pegasos.

C++ Example Programs:

svm_pegasos_ex.cpp,

svm_sparse_ex.cpp [top]svm_rank_trainer

This object represents a tool for training a ranking support vector machine

using linear kernels. In particular, this object is a tool for training

the Ranking SVM described in the paper:

Optimizing Search Engines using Clickthrough Data by Thorsten Joachims

Finally, note that the implementation of this object is done using the

oca optimizer and

count_ranking_inversions method.

This means that it runs in O(n*log(n)) time, making it suitable for use

with large datasets.

C++ Example Programs:

svm_rank_ex.cppPython Example Programs:

svm_rank.py [top]svm_struct_controller_node

This object is a tool for distributing the work involved in solving a

structural_svm_problem across many computers.

[top]svm_struct_processing_node

This object is a tool for distributing the work involved in solving a

structural_svm_problem across many computers.

[top]svr_linear_trainer

This object implements a trainer for performing epsilon-insensitive support

vector regression. It uses the

oca

optimizer so it is very efficient at solving this problem when

linear kernels are used, making it suitable for use with large

datasets.

[top]svr_trainer

This object implements a trainer for performing epsilon-insensitive support

vector regression. It is implemented using the SMO algorithm,

allowing the use of non-linear kernels.

If you are interested in performing support vector regression with a linear kernel and you

have a lot of training data then you should use the svr_linear_trainer

which is highly optimized for this case.

The implementation of the eps-SVR training algorithm used by this object is based

on the following paper:

C++ Example Programs:

svr_ex.cpp [top]test_binary_decision_function

Tests a

decision_function that represents a binary decision function and

returns the test accuracy.

[top]test_graph_labeling_function

Tests a

graph_labeler on a set of data

and returns the fraction of labels predicted correctly.

[top]test_layer

This is a function which tests if a layer object correctly implements

the

documented contract

for a computational layer in a deep neural network.

[top]test_ranking_function

Tests a

decision_function's ability to correctly

rank a dataset and returns the resulting ranking accuracy and mean average precision metrics.

C++ Example Programs:

svm_rank_ex.cppPython Example Programs:

svm_rank.py [top]test_regression_function

Tests a regression function (e.g.

decision_function)

and returns the mean squared error and R-squared value.

[top]test_shape_predictor

Tests a

shape_predictor's ability to correctly

predict the part locations of objects. The output is the average distance (measured in pixels) between

each part and its true location. You can optionally normalize each distance using a

user supplied scale. For example, when performing face landmarking, you might want to

normalize the distances by the interocular distance.

C++ Example Programs:

train_shape_predictor_ex.cppPython Example Programs:

train_shape_predictor.py [top]track_association_function

This object is a tool that helps you implement an object tracker. So for

example, if you wanted to track people moving around in a video then this

object can help. In particular, imagine you have a tool for detecting the

positions of each person in an image. Then you can run this person

detector on the video and at each time step, i.e. at each frame, you get a

set of person detections. However, that by itself doesn't tell you how

many people there are in the video and where they are moving to and from.

To get that information you need to figure out which detections match each

other from frame to frame. This is where the track_association_function

comes in. It performs the detection to track association. It will also do

some of the track management tasks like creating a new track when a

detection doesn't match any of the existing tracks.

Internally, this object is implemented using the

assignment_function object.

In fact, it's really just a thin wrapper around assignment_function and

exists just to provide a more convenient interface to users doing detection

to track association.

C++ Example Programs:

learning_to_track_ex.cpp [top]train_probabilistic_decision_function

Trains a probabilistic_function using

some sort of binary classification trainer object such as the svm_nu_trainer or

krr_trainer.

The probability model is created by using the technique described in the following papers:

Probabilistic Outputs for Support Vector Machines and

Comparisons to Regularized Likelihood Methods by

John C. Platt. March 26, 1999

A Note on Platt's Probabilistic Outputs for Support Vector Machines

by Hsuan-Tien Lin, Chih-Jen Lin, and Ruby C. Weng

C++ Example Programs:

svm_ex.cpp [top]vector_normalizer

This object represents something that can learn to normalize a set

of column vectors. In particular, normalized column vectors should

have zero mean and a variance of one.

C++ Example Programs:

svm_ex.cpp [top]vector_normalizer_frobmetric

This object is a tool for performing the FrobMetric distance metric

learning algorithm described in the following paper:

A Scalable Dual Approach to Semidefinite Metric Learning

By Chunhua Shen, Junae Kim, Lei Wang, in CVPR 2011

Therefore, this object is a tool that takes as input training triplets

(anchor, near, far) of vectors and attempts to learn a linear

transformation T such that:

length(T*anchor-T*near) + 1 < length(T*anchor - T*far)

That is, you give a bunch of anchor vectors and for each anchor vector you

specify some vectors which should be near to it and some that should be far

form it. This object then tries to find a transformation matrix that makes

the "near" vectors close to their anchors while the "far" vectors are

farther away.

[top]vector_normalizer_pca

This object represents something that can learn to normalize a set

of column vectors. In particular, normalized column vectors should

have zero mean and a variance of one.

This object also uses principal component analysis for the purposes

of reducing the number of elements in a vector.

[top]verbose_batch

This is a convenience function for creating

batch_trainer objects. This function

generates a batch_trainer that will print status messages to standard

output so that you can observe the progress of a training algorithm.

C++ Example Programs:

svm_pegasos_ex.cpp [top]verbose_batch_cached

This is a convenience function for creating

batch_trainer objects. This function

generates a batch_trainer that will print status messages to standard

output so that you can observe the progress of a training algorithm.

It will also be configured to use a kernel matrix cache.

Examples: C++

Examples: C++ Examples: Python

Examples: Python