
Note

Codecs mentioned in this section are for encoding and decoding data blocks or row keys. For information about replication codecs, see Preserving Tags During Replication.

Some of the information in this section is pulled from a discussion on the HBase Development mailing list.

HBase supports several different compression algorithms that can be enabled on a ColumnFamily. Data block encoding attempts to limit duplication of information in keys, taking advantage of some of the fundamental designs and patterns of HBase, such as sorted row keys and the schema of a given table. Compressors reduce the size of large, opaque byte arrays in cells, and can significantly reduce the storage space that the data would need if stored uncompressed.
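As a rough illustration of what a compressor does to cell values, the following Python sketch uses the standard-library zlib module as a stand-in for an HBase codec. It is not HBase code. It compares a repetitive value with a block of random bytes; treating random bytes as a proxy for already-compressed content is an assumption made only for this example.

```python
import random
import zlib

def compression_ratio(data: bytes) -> float:
    """Return compressed size divided by original size (lower is better)."""
    return len(zlib.compress(data)) / len(data)

# A repetitive value, similar to text or structured records.
repetitive = b"temperature=21.5;unit=C;" * 1000

# Random bytes as a stand-in for precompressed content such as images.
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(len(repetitive)))

print(f"repetitive: {compression_ratio(repetitive):.3f}")
print(f"random:     {compression_ratio(noise):.3f}")
```

The repetitive value shrinks to a small fraction of its size, while the random block does not shrink at all, which is why compressing already-compressed values costs CPU without saving space.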

Compressors and data block encoding can be used together on the same ColumnFamily.

Changes Take Effect Upon Compaction. If you change compression or encoding for a ColumnFamily, the changes take effect during compaction.

Some codecs take advantage of capabilities built into Java, such as GZip compression. Others rely on native libraries. Some native libraries, such as LZ4, may be available as part of Hadoop. In this case, HBase only needs access to the appropriate shared library. Other codecs, such as Google Snappy, need to be installed first. Some codecs are licensed in ways that conflict with HBase's license and cannot be shipped as part of HBase.

This section discusses common codecs that are used and tested with HBase. No matter what codec you use, be sure to test that it is installed correctly and is available on all nodes in your cluster. Extra operational steps may be necessary to be sure that codecs are available on newly-deployed nodes. You can use the CompressionTest utility to check that a given codec is correctly installed; see Section E.3.1.6, “CompressionTest”.

To configure HBase to use a compressor, see Section E.3.1, “Configure HBase For Compressors”. To enable a compressor for a ColumnFamily, see Section E.3.2, “Enable Compression On a ColumnFamily”. To enable data block encoding for a ColumnFamily, see Section E.4, “Enable Data Block Encoding”.

Block Compressors

none

Snappy

LZO

LZ4

GZ

Data Block Encoding Types

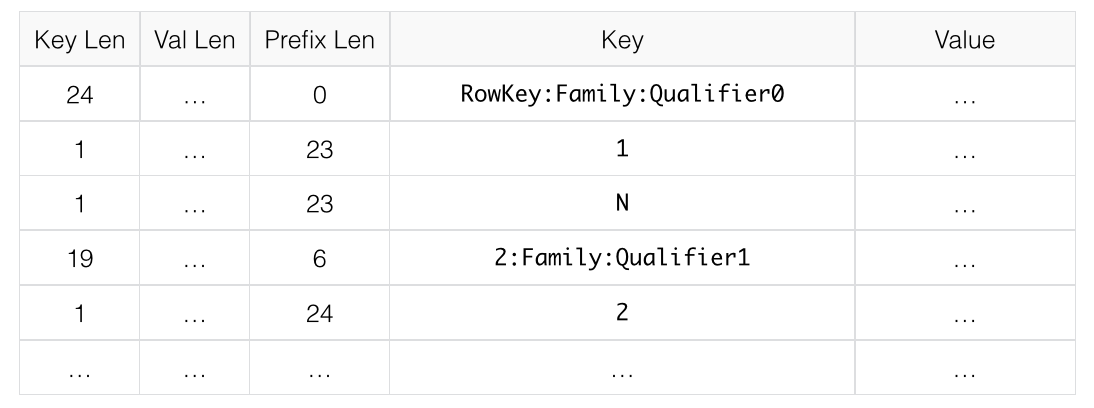

Prefix - Often, keys are very similar. Specifically, keys often share a common prefix and only differ near the end. For instance, one key might be RowKey:Family:Qualifier0 and the next key might be RowKey:Family:Qualifier1. In Prefix encoding, an extra column is added which holds the length of the prefix shared between the current key and the previous key. Assuming the first key here is totally different from the key before, its prefix length is 0. The second key's prefix length is 23, since they have the first 23 characters in common. Obviously if the keys tend to have nothing in common, Prefix will not provide much benefit.
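The idea behind Prefix encoding can be sketched in a few lines of Python. This is a simplified model of the technique, not HBase's on-disk format, and the function names are hypothetical. Each key is stored as the length of the prefix it shares with the previous key, plus the remaining suffix.

```python
def shared_prefix_len(prev: bytes, cur: bytes) -> int:
    """Count the leading bytes that prev and cur have in common."""
    n = 0
    for a, b in zip(prev, cur):
        if a != b:
            break
        n += 1
    return n

def prefix_encode(keys):
    """Encode sorted keys as (prefix_length, suffix) pairs."""
    encoded = []
    prev = b""  # the first key has nothing to share a prefix with
    for key in keys:
        n = shared_prefix_len(prev, key)
        encoded.append((n, key[n:]))
        prev = key
    return encoded

def prefix_decode(encoded):
    """Rebuild the full keys from (prefix_length, suffix) pairs."""
    keys = []
    prev = b""
    for n, suffix in encoded:
        key = prev[:n] + suffix
        keys.append(key)
        prev = key
    return keys

keys = [b"RowKey:Family:Qualifier0", b"RowKey:Family:Qualifier1"]
encoded = prefix_encode(keys)
# encoded == [(0, b"RowKey:Family:Qualifier0"), (23, b"1")]
```

For the two example keys, the second key is reduced to a prefix length of 23 and a one-byte suffix.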

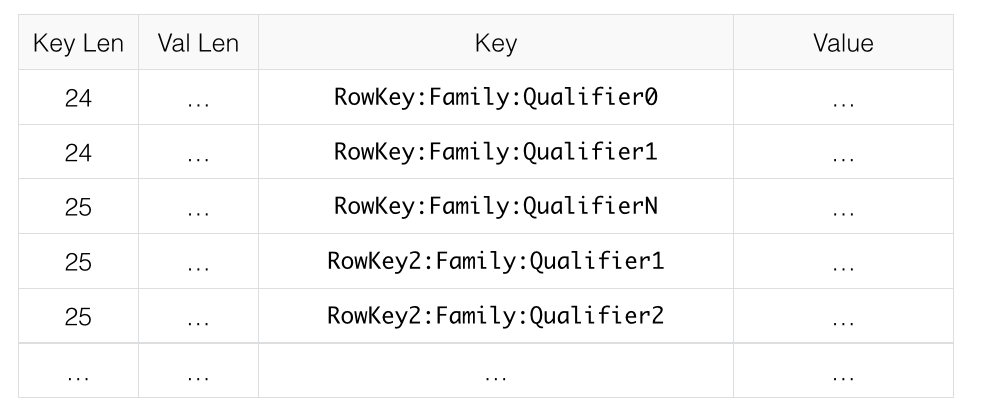

The following image shows a hypothetical ColumnFamily with no data block encoding.

Here is the same data with prefix data encoding.

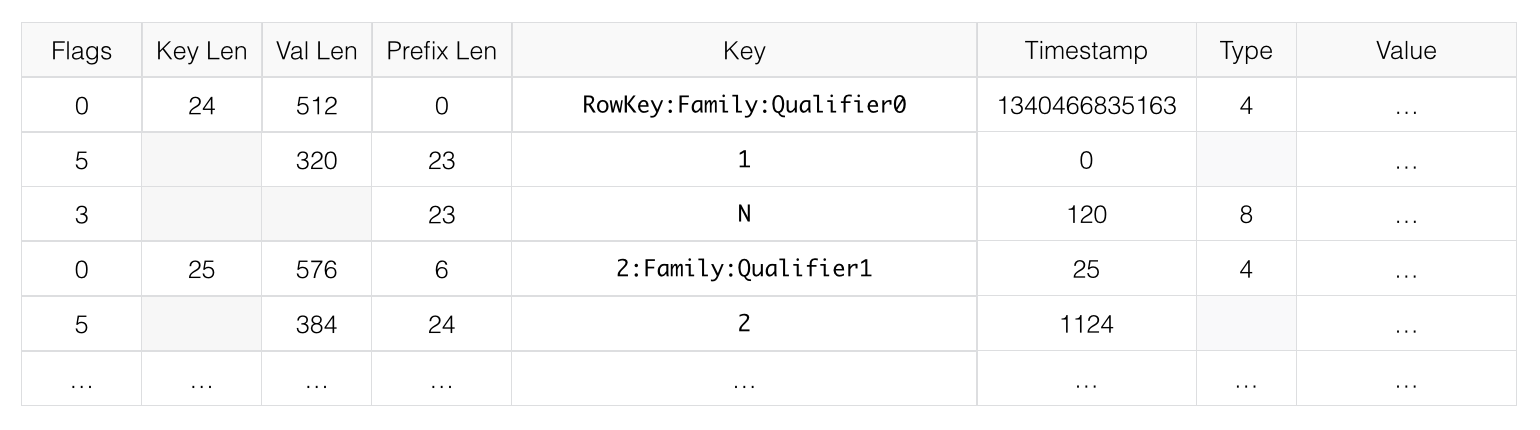

Diff - Diff encoding expands upon Prefix encoding. Instead of considering the key sequentially as a monolithic series of bytes, each key field is split so that each part of the key can be compressed more efficiently. Two new fields are added: timestamp and type. If the ColumnFamily is the same as the previous row, it is omitted from the current row. If the key length, value length, or type is the same as the previous row, the field is omitted. In addition, for increased compression, the timestamp is stored as a Diff from the previous row's timestamp, rather than being stored in full. Given the two row keys in the Prefix example, and given an exact match on timestamp and the same type, neither the value length nor the type needs to be stored for the second row, and the timestamp value for the second row is just 0, rather than a full timestamp.

Diff encoding is disabled by default because it makes writing and scanning slower, although it allows more data to be cached.
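The field-by-field behavior of Diff encoding can be modeled roughly as follows. This sketch is an assumption-laden simplification, not HBase's actual block layout: the Cell type, the field names, and the dictionary records are all hypothetical, and the key bytes themselves (which would be prefix-encoded) are left out. It only shows which metadata fields would need to be stored for each cell.

```python
from typing import NamedTuple

class Cell(NamedTuple):
    # Hypothetical, simplified cell model for illustration only.
    row: bytes
    family: bytes
    qualifier: bytes
    timestamp: int
    type: str
    value: bytes

def metadata(cell: Cell) -> dict:
    """Fields that are omitted when unchanged from the previous cell."""
    return {
        "family": cell.family,
        "key_len": len(cell.row) + len(cell.family) + len(cell.qualifier),
        "value_len": len(cell.value),
        "type": cell.type,
    }

def diff_encode(cells):
    """Return one record per cell holding only changed fields
    and the timestamp as a difference from the previous cell."""
    encoded = []
    prev_meta, prev_ts = {}, 0
    for cell in cells:
        cur = metadata(cell)
        record = {k: v for k, v in cur.items() if prev_meta.get(k) != v}
        record["timestamp_diff"] = cell.timestamp - prev_ts
        encoded.append(record)
        prev_meta, prev_ts = cur, cell.timestamp
    return encoded

cells = [
    Cell(b"RowKey", b"Family", b"Qualifier0", 1_400_000_000_000, "Put", b"aaaa"),
    Cell(b"RowKey", b"Family", b"Qualifier1", 1_400_000_000_000, "Put", b"bbbb"),
]
records = diff_encode(cells)
# records[1] == {"timestamp_diff": 0}
```

With an identical timestamp, type, and lengths, the second record carries only a timestamp difference of 0, matching the example in the text.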

This image shows the same ColumnFamily from the previous images, with Diff encoding.

Fast Diff - Fast Diff works similarly to Diff, but uses a faster implementation. It also adds another field which stores a single bit to track whether the data itself is the same as the previous row. If it is, the data is not stored again. Fast Diff is the recommended codec to use if you have long keys or many columns. The data format is nearly identical to Diff encoding, so no image is included to illustrate it.
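The extra "same data as the previous row" bit can be illustrated with a small sketch. The function names and the tuple layout are hypothetical and do not reflect how HBase packs the flag; the point is only that a repeated value is replaced by a flag.

```python
def encode_values(values):
    """Store each value as (same_as_previous, value_or_None)."""
    encoded = []
    prev = None
    for value in values:
        if prev is not None and value == prev:
            encoded.append((True, None))   # flag set, value not stored again
        else:
            encoded.append((False, value))
        prev = value
    return encoded

def decode_values(encoded):
    """Rebuild the original values from the flagged form."""
    values = []
    prev = None
    for same, value in encoded:
        current = prev if same else value
        values.append(current)
        prev = current
    return values

values = [b"on", b"on", b"on", b"off"]
flagged = encode_values(values)
# flagged == [(False, b"on"), (True, None), (True, None), (False, b"off")]
```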

Prefix Tree - Prefix Tree encoding was introduced as an experimental feature in HBase 0.96. It provides similar memory savings to the Prefix, Diff, and Fast Diff encoders, but provides faster random access at a cost of slower encoding speed. Prefix Tree may be appropriate for applications that have high block cache hit ratios. It introduces new 'tree' fields for the row and column. The row tree field contains a list of offsets/references corresponding to the cells in that row. This allows for a good deal of compression. For more details about Prefix Tree encoding, see HBASE-4676. It is difficult to graphically illustrate a prefix tree, so no image is included. See the Wikipedia article for Trie for more general information about this data structure.
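For readers unfamiliar with tries, the following is a minimal generic trie in Python. It is not the Prefix Tree block format described in HBASE-4676, and the function names are hypothetical. It only shows how keys with shared prefixes collapse into shared nodes and how a lookup walks the tree rather than scanning keys sequentially.

```python
def build_trie(keys):
    """Build a nested-dict trie; None marks the end of a stored key."""
    root = {}
    for key in keys:
        node = root
        for byte in key:
            node = node.setdefault(byte, {})
        node[None] = True
    return root

def contains(root, key) -> bool:
    """Walk the trie one byte at a time to test membership."""
    node = root
    for byte in key:
        if byte not in node:
            return False
        node = node[byte]
    return None in node

def count_nodes(node) -> int:
    """Count byte nodes, ignoring end-of-key markers."""
    return sum(1 + count_nodes(child)
               for label, child in node.items() if label is not None)

row_keys = [b"row1", b"row2", b"row3"]
trie = build_trie(row_keys)
# 12 key bytes in total are represented by 6 trie nodes.
```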

The compression or codec type to use depends on the characteristics of your data. Choosing the wrong type could cause your data to take more space rather than less, and can have performance implications. In general, you need to weigh your options between smaller size and faster compression/decompression. The following general guidelines are expanded from a discussion at Documenting Guidance on compression and codecs.

If you have long keys (compared to the values) or many columns, use a prefix encoder. FAST_DIFF is recommended, as more testing is needed for Prefix Tree encoding.

If the values are large (and not precompressed, such as images), use a data block compressor.

Use GZIP for cold data, which is accessed infrequently. GZIP compression uses more CPU resources than Snappy or LZO, but provides a higher compression ratio.

Use Snappy or LZO for hot data, which is accessed frequently. Snappy and LZO use fewer CPU resources than GZIP, but do not provide as high a compression ratio.

In most cases, enabling Snappy or LZO by default is a good choice, because they have a low performance overhead and provide space savings.
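The guidelines above can be summarized as a small decision helper. This is one possible reading of the guidelines, not an official rule set: the function name and parameters are hypothetical, and the returned strings are labels for illustration. Where the guidelines allow either Snappy or LZO, the sketch arbitrarily picks Snappy.

```python
def suggest_settings(long_keys_or_many_columns: bool,
                     values_precompressed: bool = False,
                     hot: bool = True) -> dict:
    """Map coarse data characteristics to an encoding and a compressor."""
    # Long keys (relative to values) or many columns: use a prefix encoder.
    encoding = "FAST_DIFF" if long_keys_or_many_columns else "NONE"

    if values_precompressed:
        # Assumption: already-compressed values gain little from a compressor.
        compression = "NONE"
    elif hot:
        # Frequently accessed data: favor low CPU cost (Snappy or LZO).
        compression = "SNAPPY"
    else:
        # Infrequently accessed data: favor a higher compression ratio.
        compression = "GZ"

    return {"DATA_BLOCK_ENCODING": encoding, "COMPRESSION": compression}

cold_archive = suggest_settings(long_keys_or_many_columns=True, hot=False)
default_table = suggest_settings(long_keys_or_many_columns=False)
```

Treat the result as a starting point only; measuring size and latency on your own data remains the deciding factor.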

Before Google released Snappy in 2011, LZO was the default. Snappy has qualities similar to LZO's but has been shown to perform better.