
Integrated

Seamlessly mix SQL queries with Spark programs.

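Spark SQL lets you query structured data inside Spark programs, using either SQL or a familiar DataFrame API. Usable in Java, Scala, Python and R.

```python
# Assumes an existing SparkSession named `spark` and a registered "people" view
results = spark.sql(
  "SELECT * FROM people")
# PySpark DataFrames have no .map() since 2.0; map over the underlying RDD of Rows
names = results.rdd.map(lambda p: p.name)
```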

Uniform Data Access

Connect to any data source the same way.

DataFrames and SQL provide a common way to access a variety of data sources, including Hive, Avro, Parquet, ORC, JSON, and JDBC. You can even join data across these sources.

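```python
# Load a JSON source (path elided) and register it as the SQL view "json"
spark.read.json("...") \
    .createOrReplaceTempView("json")
results = spark.sql(
  """SELECT *
     FROM people
     JOIN json ...""")
```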

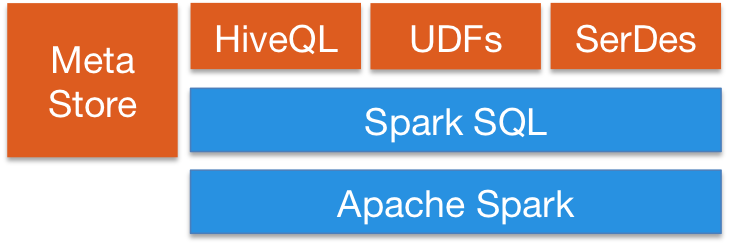

Hive Integration

Run SQL or HiveQL queries on existing warehouses.

Spark SQL supports the HiveQL syntax as well as Hive SerDes and UDFs, allowing you to access existing Hive warehouses.

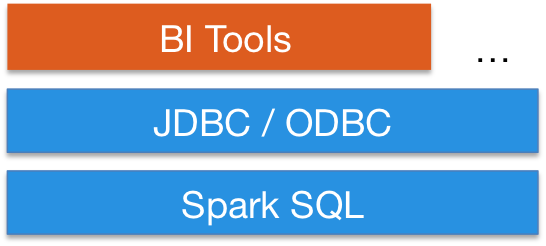

Standard Connectivity

Connect through JDBC or ODBC.

A server mode (the Spark Thrift JDBC/ODBC server) provides industry-standard JDBC and ODBC connectivity for business intelligence tools.

Performance & Scalability

Spark SQL includes a cost-based optimizer, columnar storage and code generation to make queries fast. At the same time, it scales to thousands of nodes and multi-hour queries using the Spark engine, which provides full mid-query fault tolerance. Don't worry about using a different engine for historical data.

Community

Spark SQL is developed as part of Apache Spark. It thus gets tested and updated with each Spark release.

If you have questions about the system, ask on the Spark mailing lists.

The Spark SQL developers welcome contributions. If you'd like to help out, read how to contribute to Spark and send us a patch!

Getting Started

To get started with Spark SQL:

- Download Spark. It includes Spark SQL as a module.

- Read the Spark SQL and DataFrame guide to learn the API.