| Oracle® Database Quality of Service Management User's Guide 11g Release 2 (11.2) Part Number E24611-02 |


This chapter provides an overview of Oracle Database Quality of Service Management (Oracle Database QoS Management).

Many companies are consolidating and standardizing their data center computer systems. Instead of using individual servers for each application, they run multiple applications on clustered databases. Also, the migration of applications to the Internet has introduced the problem of managing an open workload. With an open workload comes a new type of application failure that is caused by demand surges that cannot be fully anticipated or planned for. To keep applications available and performing within their target service levels in this type of environment, you must pool resources, have management tools that detect performance bottlenecks in real time, and reallocate resources to meet the change in demand.

Oracle Database QoS Management is an automated, policy-based product that monitors the workload requests for an entire system. Oracle Database QoS Management manages the resources that are shared across applications and adjusts the system configuration to keep the applications running at the performance levels needed by your business. Oracle Database QoS Management responds gracefully to changes in system configuration and demand, thus avoiding additional oscillations in the performance levels of your applications.

Oracle Database QoS Management monitors the performance of each work request on a target system. It starts to track a work request from the time the work request asks for a connection to the database using a database service. The amount of time required to complete a work request, or the response time (also known as the end-to-end response time, or round-trip time), is the time from when the request for data is initiated to when the data request is completed. By accurately measuring the two components of response time, which are the time spent using resources and the time spent waiting to use resources, Oracle Database QoS Management can quickly detect bottlenecks in the system. Oracle Database QoS Management then makes suggestions to reallocate resources to relieve a bottleneck, thus preserving or restoring service levels.
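The two components of response time can be illustrated with a small model. The following Python sketch is not part of the product; the class name, the field names, and the 0.5 threshold are assumptions chosen only to show how a request that spends most of its time waiting points to a resource bottleneck.

```python
from dataclasses import dataclass


@dataclass
class WorkRequestTiming:
    """Timing for one completed work request (hypothetical structure)."""
    use_seconds: float   # time spent using resources (for example, CPU)
    wait_seconds: float  # time spent waiting to use resources

    @property
    def response_seconds(self) -> float:
        # Response time is the sum of its two components.
        return self.use_seconds + self.wait_seconds

    @property
    def wait_fraction(self) -> float:
        # Share of the response time spent waiting; 0.0 for a zero-length request.
        total = self.response_seconds
        return self.wait_seconds / total if total > 0 else 0.0


def likely_bottleneck(timing: WorkRequestTiming, threshold: float = 0.5) -> bool:
    """Flag a request whose wait time dominates (threshold is an assumed value)."""
    return timing.wait_fraction > threshold
```

In this model, a request with 0.25 seconds of resource use and 0.75 seconds of waiting has a response time of 1.0 second and is flagged, because three quarters of that time was spent waiting.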

Oracle Database QoS Management manages the resources on your system so that:

When sufficient resources are available to meet the demand, business-level performance requirements for your applications are met, even if the workload changes.

When sufficient resources are not available to meet the demand, Oracle Database QoS Management attempts to satisfy the more critical business performance requirements at the expense of less critical performance requirements.

In a typical company, when the response times of your applications are not within acceptable levels, problem resolution can be very slow. Often, the first questions that administrators ask are: "Did we configure the system correctly? Is there a parameter change that fixes the problem? Do we need more hardware?" Unfortunately, these questions are very difficult to answer precisely; the result is often hours of unproductive and frustrating experimentation.

Oracle Database QoS Management provides the following benefits:

Reduces the time and expertise requirements for system administrators who manage Oracle Real Application Clusters (Oracle RAC) resources

Helps reduce the number of performance outages

Reduces the time needed to resolve problems that limit or decrease the performance of your applications

Provides stability to the system as the workloads change

Makes the addition or removal of servers transparent to applications

Reduces the impact on the system caused by server failures

Helps ensure that service-level agreements (SLAs) are met

Enables more effective sharing of hardware resources

Oracle Database QoS Management helps manage the resources that are shared by applications in a cluster. Oracle Database QoS Management can help identify and resolve performance bottlenecks. Oracle Database QoS Management does not diagnose or tune application or database performance issues. When tuning the performance of your applications, the goal is to achieve optimal performance. Oracle Database QoS Management does not seek to make your applications run faster, but instead works to remove obstacles that prevent your applications from running at their optimal performance levels.

This section provides a basic description of how Oracle Database QoS Management works and evaluates the performance of workloads on your system.


In previous database releases, you could use services to manage the workload on your system by starting services on groups of servers that were dedicated to particular workloads. At the database tier, for example, a group of servers might be dedicated to online transaction processing (OLTP), while another group of servers is dedicated to application testing, and a third group is used for internal applications. The system administrator can allocate resources to specific workloads by manually changing the number of servers on which a database service is allowed to run. The workloads are isolated from each other to prevent demand surges, failures, and other problems in one workload from affecting the other workloads. In this type of deployment, each workload must be separately provisioned for peak demand because resources are not shared.

The basic steps performed by Oracle Database QoS Management are as follows:

Uses a policy created by the QoS administrator to:

Assign each work request to a Performance Class by using the attributes of the incoming work request (such as the database service to which the application connects).

Determine the target response times (Performance Objectives) for each Performance Class.

Determine which Performance Classes are the most critical to your business.

Monitors the resource usage and resource wait times for all the Performance Classes.

Analyzes the average response time for a Performance Class against the Performance Objective in effect for that Performance Class.

Produces recommendations for reallocating resources to improve the performance of a Performance Class that is exceeding its target response time and provides an analysis of the predicted impact to performance levels for each Performance Class if that recommendation is implemented.

Implements the actions listed in the recommendation when directed to by the Oracle Database QoS Management administrator and then evaluates the system to verify that each Performance Class is meeting its Performance Objective after the resources have been reallocated.
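The analysis step in the list above can be sketched in Python. This is an illustrative model only, not the logic used by Oracle Database QoS Management: the `Objective` type, the convention that rank 1 is the most critical, and the function name are assumptions made for this example.

```python
from typing import Dict, List, NamedTuple


class Objective(NamedTuple):
    target_seconds: float  # Performance Objective: target average response time
    rank: int              # assumed convention: 1 is the most critical


def classes_needing_help(
    objectives: Dict[str, Objective],
    measured_avg_seconds: Dict[str, float],
) -> List[str]:
    """Return Performance Classes exceeding their target, most critical first."""
    violating = [
        name
        for name, obj in objectives.items()
        if measured_avg_seconds.get(name, 0.0) > obj.target_seconds
    ]
    # Sort by rank, then by name for a stable, repeatable order.
    return sorted(violating, key=lambda name: (objectives[name].rank, name))
```

A Performance Class with no measurement in the interval is treated here as meeting its objective, which is a simplification of this sketch.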

Caution:

By default, any named user may create a server pool. To restrict the operating system users that have this privilege, Oracle strongly recommends that you add specific users to the CRS Administrators list. See Oracle Clusterware Administration and Deployment Guide for more information about adding users to the CRS Administrators list.

Starting with Oracle Database 11g release 2, you can use server pools to create groups of servers within a cluster to provide workload isolation. A server can only belong to one server pool at any time. Databases can be created in a single server pool, or across multiple server pools. Oracle Database QoS Management can make recommendations to move a server from one server pool to another based on the measured and projected demand, and to satisfy the Performance Objectives currently in effect.

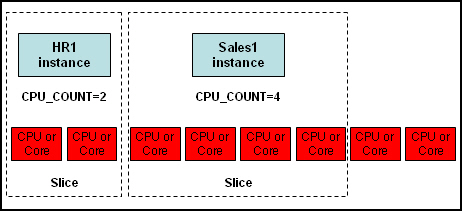

When multiple database instances share a single server, they must share its CPU, memory, and I/O bandwidth. Instance Caging limits the amount of CPU an Oracle database instance consumes, using the Oracle Database Resource Manager and the CPU_COUNT database initialization parameter. Oracle Database QoS Management requires the sum of the values for CPU_COUNT for all instances on the server to not exceed the total number of physical CPUs. Also, each CPU partition, or slice, must be uniform in thickness (number of CPUs) for each instance of a database within a server pool.

Figure 1-1 Instance Caging and CPU Slices

When you implement instance caging, Oracle Database QoS Management can provide recommendations to reallocate CPU resources from one slice to another slice within the same server pool. If you choose to implement the recommendation to modify the instance caging settings, then Oracle Database QoS Management modifies the CPU_COUNT parameter uniformly for all the database instances running on servers in the server pool.

Modifying the CPU_COUNT parameter and configuring Oracle Database QoS Management so that a Resource Plan is activated enables the Instance Caging feature. When you use Instance Caging to constrain CPU usage for an instance, that instance might become CPU-bound. This is when the Resource Manager begins to do its work, allocating CPU shares among the various database sessions according to the active resource plan.
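The two Instance Caging requirements described in this section (the CPU_COUNT values on a server must not add up to more than its physical CPUs, and a database must use the same slice size on every server in the pool) can be checked with a short script before you change any settings. The following Python sketch assumes you have already collected the CPU_COUNT values yourself; the function names and the dictionary layout are hypothetical.

```python
from typing import Dict, List


def check_instance_caging(
    physical_cpus: int,
    cpu_count_by_instance: Dict[str, int],
) -> List[str]:
    """Return a list of problems for one server; an empty list means it passes.

    cpu_count_by_instance maps an instance name to its CPU_COUNT setting.
    """
    problems: List[str] = []
    total = sum(cpu_count_by_instance.values())
    if total > physical_cpus:
        problems.append(
            f"sum of CPU_COUNT ({total}) exceeds physical CPUs ({physical_cpus})"
        )
    return problems


def slices_are_uniform(cpu_count_by_server: Dict[str, int]) -> bool:
    """True if one database has the same CPU_COUNT on every server in the pool."""
    return len(set(cpu_count_by_server.values())) <= 1
```

For example, two instances with CPU_COUNT set to 4 on an 8-CPU server pass the first check, while settings of 6 and 4 on the same server do not.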

In an Oracle RAC cluster, Oracle Database QoS Management monitors the server pools and nodes on which the database services are offered. A service can run in only one server pool. If the database spans multiple server pools, then you must create multiple services to access the instances in all server pools.

Workload is monitored for clients and applications that connect to the database using database services that are managed by Oracle Clusterware. The connections must use Java Database Connectivity (JDBC) (thick or thin), or Oracle Call Interface (OCI). Connections should use services with their run-time goal for the load balancing advisory set to SERVICE_TIME (using Server Control (SRVCTL) and the -B option) and the connection load balancing goal set to LONG (using SRVCTL and the -j option), for example:

srvctl modify service -d myracdb -s sales_cart -B SERVICE_TIME -j LONG

Oracle Database QoS Management requires the database services to be configured with the following options:

If the server pool that the service runs in has a maximum size greater than 1 (or UNLIMITED), then set the cardinality of the service (-c) to UNIFORM.

If the server pool that the service runs in has a maximum size of 1, then set the cardinality of the service (-c) to SINGLETON.
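The cardinality rule above reduces to a single decision on the maximum size of the server pool. The following Python sketch expresses that rule; the function name is hypothetical, and using `None` to represent an UNLIMITED maximum size is an assumption of this example rather than a product convention.

```python
from typing import Optional


def required_cardinality(pool_max_size: Optional[int]) -> str:
    """Map a server pool's maximum size to the service cardinality.

    None stands in for an UNLIMITED maximum size (an assumption of this sketch).
    """
    if pool_max_size is None or pool_max_size > 1:
        return "UNIFORM"
    if pool_max_size == 1:
        return "SINGLETON"
    raise ValueError("maximum size must be at least 1, or None for UNLIMITED")
```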

The central concept in Oracle Database QoS Management is the Policy Set. A Policy Set enables you to specify your resources, Performance Classes (workloads), and one or more Performance Policies that specify the Performance Objective for each Performance Class. A Policy Set can also specify constraints for resource availability. The Performance Policies used by Oracle Database QoS Management are implemented system-wide. These policies are used to manage the availability of resources for each Performance Class so that the Performance Objectives specified in the Performance Policy are satisfied.

Oracle Database QoS Management provides default classification rules and associated Performance Class names when a new Default Policy Set is created using Oracle Enterprise Manager. For example, all database services in a cluster are discovered when an initial Policy Set is created. A Performance Class for each of these services is created. The Performance Class is named by appending _pc to the service name, for example, sales_pc.

Only one Performance Policy in the Policy Set can be active at any time. Performance policies can be activated based upon a calendar schedule, maintenance windows, events, and so on. For more information about Performance Policies, see "Overview of Performance Policies and Performance Objectives".

When you create a Policy Set, you specify which server pools in the cluster should be managed by Oracle Database QoS Management. You also define Performance Classes (used to categorize workloads with similar performance requirements). You then create a Performance Policy to specify which Performance Classes have the highest priority and the Performance Objectives of each Performance Class. To satisfy the Performance Objectives, Oracle Database QoS Management makes recommendations for reallocating resources when needed and predicts what effect the recommended actions will have on the ability of each Performance Class to meet its Performance Objective.

For example, you might create a policy to manage your application workloads during business hours. The applications used by customers to buy products or services are of the highest priority to your business during this time. You also give high priority to order fulfillment and billing applications. Human resource and enterprise resource planning (ERP) applications are of a lower priority during this time. If your online sales applications experience a surge in demand, then when Oracle Database QoS Management recommends that more resources be allocated to the sales applications and taken away from applications of lesser importance, the recommendation also includes a prediction of the change in performance (positive or negative) for each Performance Class. See "Overview of Recommendations" for more information about recommendations.

A Policy Set, as shown in Figure 1-2, consists of the following:

The server pools that are being managed by Oracle Database QoS Management

Performance Classes, which are groups of work requests with similar performance objectives

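Performance Policies, which describe how resources should be allocated to the Performance Classes by using Performance Objectives that are ranked by how critical they are to your business and, optionally, server pool directive overrides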

Figure 1-2 Elements of an Oracle Database QoS Management Policy Set

When deciding how many clusters to create for your business, you need to compare the possible cost savings through consolidation of servers with the risk that the consolidated workloads will interfere with each other in some significant way. With the introduction of server pools to logically divide a cluster, you can achieve the benefit of physical consolidation and resource agility while maintaining workload isolation.

Caution:

By default, any named user may create a server pool. To restrict the operating system users that have this privilege, Oracle strongly recommends that you add specific users to the CRS Administrators list. See Oracle Clusterware Administration and Deployment Guide for more information about adding users to the CRS Administrators list.

As the administrator, you can define the workloads that can run in various server pools, as shown in Figure 1-3. Applications that connect to your Oracle RAC database use a service that runs only on the servers currently allocated to that server pool. For example, in Figure 1-3, connections and applications that use the CS service access only the servers in the HR server pool, so that work done by those connections does not interfere with the applications using the Sales service. Oracle Database QoS Management can assist you in managing the resource allocations within each of those groups to meet your service levels and in automatically redistributing resources to meet changes in your business requirements.

Figure 1-3 Diagram of Server Pools, Oracle Databases, and Database Services

With server pools, you can now create groups of servers that can be managed as a single entity. Databases can be created to run in these server pools. If each server runs only a single instance of a database, then if the database needs more resources, an additional server can be allocated to the server pool. If multiple database instances run on a single server, they must compete for the shared resources of that server, such as memory and CPU. If one of the database instances is experiencing a much higher workload than the other instances, that database instance can significantly degrade the performance of the other instances running on the same server.

You can use Instance Caging to limit the amount of CPU an Oracle database instance consumes. By setting the CPU_COUNT parameter to limit the maximum number of CPUs an instance can use, you partition the CPUs among the database instances on a server, thus preventing them from using excessive amounts of CPU resources. The CPU_COUNT setting must be the same for each instance of a database within a server pool. Oracle Database QoS Management can monitor the CPU usage among all the database instances in the server pool and recommend changes to the current settings if needed.

Instead of creating multiple server pools and moving resources among the different pools, why not just have all servers in a single server pool? There are a few reasons, for example:

Different types of workloads require different configurations and have different tuning goals. For example, a customer who is using your OLTP applications to purchase goods or services expects the shipping and payment information screens to have a quick response time. If your application takes too long to process the order, then the customer might lose interest and your company loses a sale. By contrast, an employee who is accessing an internal HR application will most likely not quit their job if the application takes longer than expected for an online task to complete.

Applications can have various resource requirements throughout the day, week, or month to meet their business objectives. Use server pools to divide the resources among the application workloads and server pool directive overrides in a Performance Policy to change the default attributes (such as Max or Min) for a server pool to meet the Performance Objectives of a given time period.

For example, an online tax service must make sure to have the tax statements prepared and filed for its customers by the government-specified deadline. In the time frame immediately preceding a filing deadline, applications related to tax statement preparation and filing need more resources than they do at other times of the year. You might create a Performance Policy named QuarterlyFilings and specify that when this Performance Policy is active, the server pool used by the tax preparation applications should have a minimum of four servers instead of two to handle the additional workload. When the QuarterlyFilings Performance Policy is not in effect, a server pool directive override is not used for the tax preparation server pool, so the minimum number of servers in that server pool is two.
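The QuarterlyFilings example can be modeled as default server pool attributes plus per-policy overrides. The following Python sketch is only an illustration of that idea: the pool name `tax_pool`, the maximum of six servers, and all type and function names are hypothetical, and the real product stores and applies these settings through its own Policy Set.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class PoolAttributes:
    min_servers: int
    max_servers: int


def effective_attributes(
    defaults: Dict[str, PoolAttributes],
    overrides_by_policy: Dict[str, Dict[str, PoolAttributes]],
    active_policy: Optional[str],
) -> Dict[str, PoolAttributes]:
    """Apply the active policy's overrides on top of the default pool attributes."""
    result = dict(defaults)  # copy, so the defaults are never modified
    if active_policy is not None:
        result.update(overrides_by_policy.get(active_policy, {}))
    return result


# Hypothetical data matching the example in the text: a minimum of two servers
# by default, and a minimum of four while QuarterlyFilings is active.
DEFAULTS = {"tax_pool": PoolAttributes(min_servers=2, max_servers=6)}
OVERRIDES = {"QuarterlyFilings": {"tax_pool": PoolAttributes(4, 6)}}
```

With this data, the effective minimum for `tax_pool` is four while QuarterlyFilings is active and returns to two under any policy that has no override for that pool.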

By regulating the number of servers that host a workload, application users experience a consistent level of performance, even in the presence of changing demand levels. This prevents performance expectations of your customers from being reset when workload levels change from low to high demand.

For example, if a new consumer product is in high demand, and your company advertises that it has large quantities of that product for sale at a reduced price, then the number of transactions processed by your OLTP applications increases rapidly (a demand surge occurs) when many new customers create orders in your system. Customers in your existing customer base who are not interested in the new product would not want their online shopping experience to be impacted by the flood of new customers. Also, if your OLTP application is not able to accept all the incoming orders, then some of the new customers might decide to place their order with a different company or to visit a retail store.

Oracle Database QoS Management helps you to manage the reallocation of available resources to meet the demand surge without sacrificing the quality of service of your other applications.

Some workloads do not scale well but still benefit from the high availability of a cluster environment. Deploying these workloads in a fixed-size server pool offers both performance manageability and high availability.

For example, you might run an ERP application in a server pool with a fixed size of one server. This means the maximum size of the server pool and the minimum size of the server pool are both set to one. If a server that is allocated to that server pool fails, then Oracle Clusterware automatically allocates a new server to that server pool so that the minimum size is maintained. Any instances and services that were running on the failed server are started on the new server and your application remains available.

See Also:

Oracle Clusterware Administration and Deployment Guide for more information about server pools

Oracle Database Concepts for a description of an OLTP system

Caution:

By default, any named user may create a server pool. To restrict the operating system users that have this privilege, Oracle strongly recommends that you add specific users to the CRS Administrators list. See Oracle Clusterware Administration and Deployment Guide for more information about adding users to the CRS Administrators list.

When you first install Oracle Grid Infrastructure for a cluster, a default server pool (the Free pool) is created. All servers are initially placed in this server pool. You should create one or more server pools depending on the workloads that need to be managed. When you create a new server pool, the servers that you assign to that server pool are automatically moved out of the Free pool and placed in the newly created server pool. At this point, you can install a database to run in that server pool and create database services that are managed by Oracle Clusterware for applications to connect to that database.

For an Oracle RAC database to take advantage of the flexibility of server pools, the database must be created using the policy-managed deployment option, which places the database in one or more server pools. Upgraded Oracle databases are converted directly to administrator-managed databases and must be separately migrated to policy-managed databases. See Oracle Real Application Clusters Administration and Deployment Guide for more information about changing an administrator-managed database to a policy-managed database.

Caution:

Oracle Database QoS Management does not support candidate server lists; do not create server pools with candidate server lists (using the server_names attribute). The use of candidate server lists is primarily for server pools hosting third-party applications, not Oracle databases.

See Also:

Oracle Real Application Clusters Installation Guide for Linux and UNIX (or other platform) for more information about creating a policy-managed database

As mentioned previously, when you create a Policy Set, you define the Performance Objectives for various Performance Classes, or workloads, that run on your cluster. To determine which Performance Class a work request belongs to, a set of classification rules is evaluated against the work requests when they are first detected by the cluster. These rules enable value matching against attributes of the work request; when there is a match between the type of work request and the criteria for inclusion in a Performance Class, the work request is classified into that Performance Class. The fundamental classifier used to assign work requests to Performance Classes is the name of the service that is used to connect to the database.


The classification of work requests applies a user-defined name (tag) that identifies the Performance Class to which the work request belongs. All work requests that are grouped into a particular Performance Class have the same performance objectives. In effect, the tag connects the work request to the Performance Objective for the associated Performance Class. Tags are permanently assigned to each work request so that every component of the system can take measurements and provide data to Oracle Database QoS Management for evaluation against the applicable Performance Objectives.

Classification occurs wherever new work enters the system. When a work request arrives at a server, the work request is checked for a tag. If the work request has a tag, then the server concludes that this work request has already been classified, and the tag is not changed. If the work request does not include a tag, then the classifiers are checked, and a tag for the matching Performance Class is attached to the work request.

To illustrate how work requests are classified, consider an application that connects to an Oracle RAC database. The application uses the database service sales. The Oracle Database QoS Management administrator specified during the initial configuration of Oracle Database QoS Management that the sales_pc Performance Class should contain work requests that use the sales service. When a connection request is received by the database, Oracle Database QoS Management checks for a tag. If a tag is not found, then Oracle Database QoS Management compares the information in the connection request with the classifiers specified for each Performance Class, in the order specified in the Performance Policy. If the connection request being classified is using the sales service, then when the classifiers in the sales_pc Performance Class are compared to the connection request information, a match is found and the database work request is assigned a tag for the sales_pc Performance Class.
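The tag check described above can be sketched as follows. This Python model is an illustration under stated assumptions, not the product's implementation: a work request is represented as a plain dictionary, the classifier list is hard-coded, and the handling of a request that matches no classifier is left out.

```python
from typing import Dict, List, Optional, Tuple

# Ordered (Performance Class, service name) pairs; names follow the text's example.
CLASSIFIERS: List[Tuple[str, str]] = [
    ("sales_pc", "sales"),
    ("hr_pc", "hr"),
]


def classify(request: Dict[str, Optional[str]]) -> Optional[str]:
    """Return the tag for a work request, assigning one only if it has none."""
    existing = request.get("tag")
    if existing is not None:
        # Already classified where it entered the system: keep the tag unchanged.
        return existing
    for performance_class, service in CLASSIFIERS:
        if request.get("service") == service:
            request["tag"] = performance_class
            return performance_class
    return None  # no classifier matched; this case is not modeled here
```

An untagged request that uses the sales service receives the sales_pc tag, while a request that already carries a tag keeps it regardless of the service it uses.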

A single application can support work requests of many types, with a range of performance characteristics. By extending and refining the default classification rules, the Oracle Database QoS Management administrator can write multiple Performance Objectives for a single application. For example, the administrator might decide that a web-based application should have separate Performance Objectives for work requests related to logging in, browsing, searching, and purchasing.

Oracle Database QoS Management supports user-defined combinations of connection parameters to map Performance Classes to the actual workloads running in the database. These connection parameters belong to two general classes and can be combined to create fine-grained Boolean expressions:

Configuration Parameters—The supported configuration parameters are SERVICE_NAME and USERNAME. Each classifier in a Performance Class must specify the name of a database service. Additional granularity can be achieved by identifying the name of the user that is making the database connection from either a client or the middle tier. The advantage of using these classifiers is that they do not require application code changes to associate different workloads with separate Performance Classes.

Application Parameters—The supported application parameters are MODULE, ACTION, and PROGRAM. These are optional parameters. The values for MODULE and ACTION must be set within the application. Depending on the type of application, you can set these parameters as follows:

The PROGRAM parameter is set or derived differently for each database driver and platform. Consult the appropriate Oracle Database developer's guide for further details and examples.


To manage the workload for an application, the application code makes database connections using a particular service. To provide more precise control over the workload generated by various parts of the application, you can create additional Performance Classes and use classifiers that include PROGRAM, MODULE, or ACTION in addition to the service or user name. For example, you could specify that all connections to your cluster that use the sales service belong to the sales_pc Performance Class, but connections that use the sales service and have a user name of APPADMIN belong to sales_admin Performance Class.
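A classifier that combines several connection parameters behaves like a Boolean AND over attribute values. The following Python sketch models the sales and APPADMIN example; the data layout, the function name, and the `unclassified` placeholder are assumptions for illustration only. Listing the more specific classifier first is a choice of this sketch, made so that the narrower match is evaluated before the broader one.

```python
from typing import Dict, List, Tuple

# Each classifier lists attribute values that must ALL match (a Boolean AND).
# The more specific classifier is listed first so that it is evaluated first.
CLASSIFIERS: List[Tuple[str, Dict[str, str]]] = [
    ("sales_admin", {"SERVICE_NAME": "sales", "USERNAME": "APPADMIN"}),
    ("sales_pc", {"SERVICE_NAME": "sales"}),
]


def match_performance_class(connection: Dict[str, str]) -> str:
    """Return the first Performance Class whose classifier matches the connection."""
    for performance_class, required in CLASSIFIERS:
        if all(connection.get(key) == value for key, value in required.items()):
            return performance_class
    return "unclassified"  # placeholder name used only in this sketch
```

If the two entries were swapped, every connection that uses the sales service would match sales_pc first and the sales_admin classifier would never apply.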

The Performance Classes in use at a particular data center are expected to change over time. For example, you might need to modify the Performance Objectives for one part of your application. In this case you would create a new Performance Class with additional classifiers to identify the target work requests, and update your Performance Policy to add a new Performance Objective for this Performance Class. In other words, you replace a single Performance Objective with one or more finer-grained Performance Objectives and divide the work requests for one Performance Class into multiple Performance Classes.

Application developers can suggest which Performance Classes to use. Specifically, an application developer can suggest ways to identify different application workloads, and you can use these suggestions to create classifiers for Performance Classes so that each type of work request is managed separately.

You might need to create additional Performance Classes so you can specify the acceptable response times for the different application workloads. For example, a Performance Objective may indicate that a work request performing the checkout action for the sales_pc_checkout Performance Class should not take more than one millisecond to complete and a work request performing the browse action for the sales_pc_browse Performance Class can take 100 milliseconds to complete.

See Also:

"Managing Performance Classes"

To manage the various Performance Objectives, you define one or more Performance Policies. A Performance Policy is a collection of Performance Objectives and a measure of how critical they are to your business. For example, you might define a Performance Policy for normal business hours, another for weekday nonbusiness hours, one for weekend operations, and another to be used during processing for the quarter-end financial closing. At any given time, a single Performance Policy is in effect as specified by the Oracle Database QoS Management administrator. Within each Performance Policy, the criticalness, or ranking, of the Performance Objectives can be different, enabling you to give more priority to certain workloads during specific time periods.

A Performance Policy has a collection of Performance Objectives in effect at the same time; there are one or more Performance Objectives for each application or workload that runs on the cluster. Some workloads and their Performance Objectives are more critical to the business than others. Some Performance Objectives might be more critical at certain times, and less critical at other times.

The following topics describe the components of a Performance Policy:

You create Performance Objectives for each Performance Class to specify the target performance level for all work requests that are assigned to each Performance Class. A Performance Objective specifies both a business requirement (the target performance level) and the work to which that Performance Objective applies (the Performance Class). For example, a Performance Objective might specify that work requests in the hr_pc Performance Class should have an average response time of less than 0.2 seconds.

Performance Objectives are specified with Performance Policies. Each Performance Policy includes a Performance Objective for each and every Performance Class, unless the Performance Class is marked Measure-Only. In this release, Oracle Database QoS Management supports only one type of Performance Objective, average response time.

The response time for a workload is based upon database client requests. Response time measures the time from when the cluster receives the request over the network to the time the request leaves the cluster. Response time does not include the time required to send the information over the network to or from the client. The response time for all database client requests in a Performance Class is averaged and presented as average response time, measured in seconds per database request.
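As a rough illustration of an average response time objective, the following Python sketch averages the response times observed in one interval and compares the result with a target such as the 0.2 seconds used for hr_pc earlier. The function names and the choice to raise an error for an empty interval are assumptions of this example; how the product samples and averages requests is not described here.

```python
from statistics import mean
from typing import Sequence


def average_response_seconds(response_times: Sequence[float]) -> float:
    """Average response time, in seconds per request, over one interval."""
    if not response_times:
        raise ValueError("no requests completed in this interval")
    return mean(response_times)


def meets_objective(response_times: Sequence[float], target_seconds: float) -> bool:
    """True if the average response time is below the target."""
    return average_response_seconds(response_times) < target_seconds
```

Because the objective applies to the average, a single slow request does not by itself cause a violation if the remaining requests in the interval are fast enough.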

A Performance Policy can also include a set of server pool directive overrides. A server pool directive override sets the availability properties of Min, Max, and Importance for a server pool when the Performance Policy is in effect. Server pool directive overrides serve as constraints on the allocation changes that Oracle Database QoS Management recommends, because the server pool directive overrides are honored during the activation period of the Performance Policy. For example, Oracle Database QoS Management never recommends moving a server out of a server pool if doing so results in the server pool having less than its specified minimum number of servers.
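The minimum-size constraint described above can be expressed as a simple filter over candidate server pools. The following Python sketch is a simplified model: the `Pool` type and the pool names are hypothetical, and it ignores Max and Importance, which also constrain real recommendations.

```python
from typing import Dict, List, NamedTuple


class Pool(NamedTuple):
    current: int  # servers currently in the pool
    minimum: int  # Min in effect under the active Performance Policy


def eligible_donors(pools: Dict[str, Pool]) -> List[str]:
    """Pools that could give up one server without dropping below Min."""
    return sorted(
        name for name, pool in pools.items() if pool.current - 1 >= pool.minimum
    )
```

A pool that is already at its minimum size never appears in the result, which mirrors the rule that a server is not moved out of a pool when doing so would leave the pool below its specified minimum.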

You might create Performance Policies for your system to manage workload based on the time of year or time of day, as shown in Figure 1-5. Under normal conditions, these Performance Policies keep your database workload running at a steady rate. If the workload requests for a database increase suddenly, then a particular server pool might require additional resources beyond what is specified by the Performance Policy.

Figure 1-5 Baseline Resource Management by Performance Policy

For example, assume your business takes orders over the telephone and creates orders using a sales application. Your telephone sales department is only open during regular business hours, but customers can also place orders themselves over the internet. During the day, more orders are placed so the sales applications need more resources to handle the workload. This configuration is managed by creating the Business Hours Performance Policy, and specifying that the Back Office server pool can have a maximum of two servers, enabling Oracle Database QoS Management to move servers to the Online server pool, as needed. After the telephone sales department closes, the workload for the sales applications decreases. To manage this configuration you create the After Hours Performance Policy and specify that the Back Office server pool can have a maximum of four servers, enabling your internal applications to acquire the additional resources that they need to complete their workloads before the next business day.

In this scenario, the Business Hours and After Hours Performance Policies might contain server pool directive overrides. When a Performance Policy contains a server pool directive override, the current settings of Max, Min, and Importance for the specified server pool are overridden while that Performance Policy is in effect. This enables additional servers to be placed in the Online server pool to give the online sales applications the resources they need and to limit the resources used by the Back Office server pool, so that its workload does not interfere with the sales workload.

Within a Performance Policy, you can also assign a level of business criticalness (a rank) to each Performance Class to give priority to meeting the Performance Objectives for a more critical Performance Class over a less critical one. When there are not enough resources available to meet all the Performance Objectives for all Performance Classes at the same time, the Performance Objectives for the more critical Performance Classes must be met at the expense of the less critical Performance Objectives. The Performance Policy specifies the business criticalness of each Performance Class, which can be Highest, High, Medium, Low, or Lowest.

For example, using the Performance Policies illustrated in Figure 1-5, when the Business Hours Performance Policy is in effect, the sales applications, which access the Online server pool, have the highest rank. If there are not enough resources available to meet the Performance Objectives of all the Performance Classes, then the applications that use the Online server pool will get priority access to any available resources, even if the applications using the Back Office server pool are not meeting their Performance Objectives.

You can have multiple Performance Classes at the same rank. If Oracle Database QoS Management detects more than one Performance Class not meeting its Performance Objective and the Performance Classes are assigned the same rank in the active Performance Policy, then Oracle Database QoS Management recommends a change to give the Performance Class closest to meeting its Performance Objective more resources. After implementing the recommended action, when the Performance Class is no longer below its target performance level, Oracle Database QoS Management performs a new evaluation of the system performance.
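The selection rule described above can be sketched in Python as follows. This is only an illustration: the class and function names are hypothetical, and "closest to meeting its Performance Objective" is modeled here as the smallest relative overshoot of the objective, which is an assumption and not a documented formula.

```python
from dataclasses import dataclass

# Rank names come from the text; the numeric ordering is an illustrative encoding.
RANK_ORDER = {"Highest": 0, "High": 1, "Medium": 2, "Low": 3, "Lowest": 4}


@dataclass
class PerformanceClass:
    name: str
    rank: str
    objective_s: float  # target average response time, in seconds
    average_s: float    # measured average response time, in seconds

    def violating(self):
        """True when the measured average is greater than the objective."""
        return self.average_s > self.objective_s

    def overshoot(self):
        """Relative distance from the objective (assumed measure of closeness)."""
        return (self.average_s - self.objective_s) / self.objective_s


def choose_target(classes):
    """Pick the class to help: highest rank first, then closest to its objective."""
    violators = [pc for pc in classes if pc.violating()]
    if not violators:
        return None
    return min(violators, key=lambda pc: (RANK_ORDER[pc.rank], pc.overshoot()))


# Hypothetical Performance Classes; two of them violate at the same rank.
classes = [
    PerformanceClass("web_pc", "High", objective_s=0.2, average_s=0.30),
    PerformanceClass("reports_pc", "High", objective_s=0.2, average_s=0.22),
    PerformanceClass("archive_pc", "Low", objective_s=1.0, average_s=0.50),
]
target = choose_target(classes)
```

Here both web_pc and reports_pc violate their objectives at rank High, and reports_pc is selected because it is nearer to its target.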

See Also:

"Managing Performance Policies"

The Oracle Database QoS Management Server retrieves metrics data from each database instance running in managed server pools. The data is correlated by Performance Class every five seconds. The data includes many metrics such as database request arrival rate, CPU use, CPU wait time, I/O use, I/O wait time, Global Cache use and Global Cache wait times. Information about the current topology of the cluster and the health of the servers is added to the data. The Policy and Performance Management engine of Oracle Database QoS Management (illustrated in Figure 1-6) analyzes the data to determine the overall performance profile of the system with regard to the current Performance Objectives established by the active Performance Policy.

The performance evaluation occurs once a minute and results in a recommendation if any Performance Class does not meet its objectives. The recommendation specifies which resource is the bottleneck. Specific corrective actions are included in the recommendation, if possible. The recommendation also includes a listing of the projected impact on all Performance Classes in the system if you decide to implement the recommended action.

Figure 1-6 diagrams the collection of data from various data sources and shows how that information is used by Oracle Enterprise Manager. In this figure, CHM refers to Oracle Cluster Health Monitor and Server Manager (SRVM) is a component of Oracle Clusterware.

Figure 1-6 Diagram of Oracle Database QoS Management Server Architecture

If your business experiences periodic demand surges or must support an open workload, then to retain performance levels for your applications you can design your system to satisfy the peak workload. Creating a system capable of handling the peak workload typically means acquiring additional hardware to be available when needed and sit idle when not needed. Instead of having servers remain idle except when a demand surge occurs, you might decide to use those servers to run other application workloads. However, if the servers are busy running other applications when a demand surge hits, then your system might not be able to satisfy the peak workload and your main business applications do not perform as expected. Oracle Database QoS Management enables you to manage excess capacity to meet specific performance goals through its recommendations.

When you use Oracle Database QoS Management, your system is continuously monitored in an iterative process to see if the Performance Objectives in the active Performance Policy are being met. Performance data is sent to Oracle Enterprise Manager for display in the Oracle Database QoS Management Dashboard (the Dashboard) and Performance History pages.

When one or more Performance Objectives are not being met, after evaluating the performance of your system, Oracle Database QoS Management seeks to improve the performance of a single Performance Objective: usually the highest ranked Performance Objective that is currently not being satisfied. If all Performance Objectives are satisfied with capacity to spare for both the current and projected workload, then Oracle Database QoS Management signals "No action required: all Performance Objectives are being met."

If Performance Objectives are not being met for a Performance Class, then Oracle Database QoS Management issues recommendations to rebalance the use of resources to alleviate bottlenecks. Oracle Database QoS Management evaluates several possible solutions and then chooses the solution that:

Offers the best overall system improvement

Causes the least system disruption

Helps the highest ranked violating performance class

The types of recommendations that Oracle Database QoS Management can make are:

If Performance Objectives are not being met for a Performance Class, and the Performance Class accesses the same database as other Performance Classes, then Oracle Database QoS Management can recommend consumer group mapping changes. Changing the consumer group mappings gives more access to the CPU resource to the Performance Class that is not meeting its Performance Objective. Oracle Database QoS Management issues consumer group mapping recommendations only for Performance Classes that are competing for resources in the same database and server pool.

If you have multiple database instances running on servers in a server pool, Oracle Database QoS Management can recommend that CPU resources used by a database instance in one slice on the server be donated to a slice that needs more CPU resources. If there is a Performance Class that is not meeting its Performance Objective, and there is another slice on the system that has available headroom, or the Performance Classes that use that slice are of a lower rank, then Oracle Database QoS Management can recommend moving a CPU from the idle slice to the overloaded slice. If this recommendation is implemented, then the CPU_COUNT parameter is adjusted downwards for the idle instance and upwards for the overworked instance on all servers in the server pool.
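The effect of such a recommendation on the CPU_COUNT values can be pictured with a short Python sketch. The function and instance names are hypothetical, and the rule that a donor instance must keep at least one CPU is an assumption made for the example. The sketch only models the arithmetic and does not change any database parameter.

```python
def donate_cpu(cpu_counts, donor, recipient):
    """Return a new mapping with one CPU moved from the donor to the recipient.

    cpu_counts maps an instance name to its CPU_COUNT value. The input
    mapping is left unchanged.
    """
    if cpu_counts[donor] <= 1:
        # Assumed safeguard: never leave the donor instance without a CPU.
        raise ValueError("donor instance would be left without a CPU")
    updated = dict(cpu_counts)
    updated[donor] -= 1
    updated[recipient] += 1
    return updated


# Two hypothetical instances sharing the CPUs of the servers in one server pool.
before = {"idle_db": 4, "busy_db": 4}
after = donate_cpu(before, donor="idle_db", recipient="busy_db")
```

The total number of CPUs is the same before and after the change. Only the split between the two slices moves.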

Another recommended action that Oracle Database QoS Management can display is to move a server from one server pool to another to provide additional resources to meet the Performance Objectives for a Performance Class. If all the server pools in the cluster are at their specified minimum size, or if the server pool needing the resource is at its maximum size, then Oracle Database QoS Management can no longer recommend removing servers from server pools. In this situation the Dashboard displays "No recommended action at this time."

The minimum size of a server pool is the number of servers that that server pool is required to have. If you add the values for the server pool minimum attribute for each server pool in your cluster, then the difference between this sum and the total number of servers in the cluster represents shared servers that can move between server pools (or float) to meet changes in demand. For example, if your cluster has 10 servers and two server pools, and each server pool has a minimum size of four, then your system has two servers that can be moved between server pools. These servers can be moved if the target server pool has not reached its maximum size. Oracle Database QoS Management always honors the Min and Max size constraints set in a policy when making Move Server recommendations.
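The arithmetic in this paragraph can be sketched in Python as follows. The ServerPool type, the function names, and the maximum and current sizes are hypothetical. Only the 10-server, two-pool, minimum-of-four example comes from the text.

```python
from dataclasses import dataclass


@dataclass
class ServerPool:
    name: str
    min_size: int
    max_size: int
    current_size: int


def floating_servers(total_servers, pools):
    """Servers not reserved by any pool minimum, which can move between pools."""
    return total_servers - sum(pool.min_size for pool in pools)


def can_move_server(source, target):
    """A move must keep the source at or above Min and the target at or below Max."""
    return source.current_size > source.min_size and target.current_size < target.max_size


# The example from the text: 10 servers, two pools, each with a minimum size of four.
online = ServerPool("online", min_size=4, max_size=6, current_size=5)
back_office = ServerPool("back_office", min_size=4, max_size=6, current_size=5)
floaters = floating_servers(10, [online, back_office])
move_allowed = can_move_server(source=back_office, target=online)
```

With these values two servers can float, and a move from back_office to online is allowed because back_office is above its minimum and online is below its maximum.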

If you set the minimum size of a server pool to zero and your system experiences a demand surge, then Oracle Database QoS Management can recommend moving all the servers out of that server pool so that the server pool is at its minimum size. This results in the Performance Classes that use that server pool being completely starved of resources, and essentially being shut down. A server pool with a minimum size of zero should only host applications that are of low business criticalness and Performance Classes that are assigned a low rank in the Performance Policy.

When trying to relieve a resource bottleneck for a particular Performance Class, Oracle Database QoS Management recommends adding more of the resource (such as CPU time) for that Performance Class or making the resource available more quickly to work requests in the Performance Class. The recommendations take the form of promoting the target Performance Class to a higher Consumer Group, demoting competing Performance Classes within the resource plan, adjusting CPU resources shared between different slices in a server pool, or moving servers between server pools.

Implementing a recommended action makes the resource less available to other Performance Classes. When generating recommendations, Oracle Database QoS Management evaluates the impact to system performance as a whole. If a possible recommendation for changing the allocation of resources provides a small improvement in the response time of one Performance Class, but results in a large degradation in the response time of another Performance Class, then Oracle Database QoS Management reports that the performance gain is too small, and the change is not recommended.

Oracle Database QoS Management can issue recommendations that involve a negative impact to the performance of a Performance Class if:

The negative impact on the Performance Class from which the resource is taken is projected not to cause a Performance Objective violation and a positive impact is projected for the Performance Class that gets better access to resources

The Performance Class from which the resource is taken is lower ranked, and thus less critical to your business, than the Performance Class being helped

If the resource bottleneck can be resolved in multiple ways, then Oracle Database QoS Management recommends an action that is projected to improve the performance of the highest ranked Performance Class that is violating its objective. You can also view the alternative recommendations generated by Oracle Database QoS Management and see whether the action was recommended for implementation. For example, one possible solution to resolving a bottleneck on the CPU resource is to demote the Consumer Group associated with the Performance Class that is using the CPU the most. By limiting access to the CPU for the work requests in this Performance Class, the work requests in the other Performance Classes for that database get a larger share of the CPU time. However, Oracle Database QoS Management might decide not to recommend this action because the gain in response time for the target Performance Class is too small.

The analysis data for a recommendation includes the projected change in response time for each Performance Class, the projected change in the Performance Satisfaction Metric (PSM) for each Performance Class, and the reason this action is chosen among other alternative actions, as shown in Figure 1-7. In this example, if you implement the recommended action, then Oracle Database QoS Management predicts that the sales cart Performance Class, which has the highest ranking, will have an improvement in response time from 0.00510 seconds for database requests to 0.00426 seconds, which equates to an 11.6% gain in its PSM. The other Performance Classes are not affected by the change because they use a different server pool.

Figure 1-7 Example of the Analysis for a Recommended Action

Oracle Database QoS Management does not implement the recommendations automatically. However, you can configure Oracle Enterprise Manager to generate alerts based upon how long a Performance Class has not been meeting its objective. After the Oracle Database QoS Management administrator implements a recommendation, the system performance is reevaluated for the specified settling time before any new recommendations are made.

Consider a system that has two servers in an Online server pool, and two servers in a Back Office server pool. The Online server pool hosts two workloads: the sales_pc Performance Class and the sales_cart Performance Class. The minimum size of the Online server pool is two. The Back Office server pool hosts two internal applications: a human resources (HR) application and an enterprise resource planning (ERP) application. The Back Office server pool has a minimum size of one. The sales_cart Performance Class has the highest rank and the erp_pc Performance Class has the lowest rank. The sales_pc Performance Class is ranked higher than the hr_pc Performance Class.

In this scenario, if the sales_pc workload surges, causing contention for resources and causing the sales_cart Performance Class to violate its Performance Objective, then this could lead to a service-level agreement (SLA) violation for the OLTP application. Oracle Database QoS Management issues a recommendation to increase access to the CPU for the sales_cart Performance Class at the expense of the sales_pc workload, because the sales_cart Performance Class is of a higher rank; a higher rank indicates that satisfying the Performance Objective for the sales_cart Performance Class is more important than satisfying the Performance Objective for the sales_pc Performance Class.

If, after you implement the recommendation, the sales_cart and sales_pc Performance Classes are still not satisfying their Performance Objectives, then Oracle Database QoS Management issues a recommendation to increase the number of servers in the Online server pool by moving a server from the Back Office server pool, or a server pool that hosts less critical workloads or workloads with more headroom. In this scenario, a server can be moved from the Back Office server pool, because the Back Office server pool is currently above its minimum size of one. If the Back Office server pool had a minimum size of two, then Oracle Database QoS Management would have to find an available server in a different server pool; Oracle Database QoS Management does not recommend moving a server from a server pool if doing so would cause that server pool to drop below its minimum size.

If you implement the recommended action, and your applications use Cluster Managed Services and Client Run-time Load Balancing, then the application users should not see a service disruption due to this reallocation. The services are shut down transactionally on the server being moved. After the server has been added to the stressed server pool, all database instances and their offered services are started on the reallocated server. At this point, sessions start to gradually switch to using the new server in the server pool, relieving the bottleneck.

Using the same scenario, if the sales_pc Performance Class and hr_pc Performance Class both require additional servers to meet their Performance Objectives, then Oracle Database QoS Management first issues recommendations to improve the performance of the sales_pc Performance Class, because the sales_pc Performance Class is ranked higher than the hr_pc Performance Class. When the sales_pc Performance Class is satisfying its Performance Objectives, then Oracle Database QoS Management makes recommendations to improve the performance of the hr_pc Performance Class.

Oracle Database QoS Management works with Oracle Real Application Clusters (Oracle RAC) and Oracle Clusterware. Oracle Database QoS Management operates over an entire Oracle RAC cluster, which can support a variety of applications.

Note:

Oracle Database QoS Management supports only OLTP workloads. The following types of workloads (or database requests) are not supported:

Batch workloads

Workloads that require more than one second to complete

Workloads that use parallel data manipulation language (DML)

Workloads that query GV$ views at a significant utilization level

Oracle Database QoS Management manages the CPU resource for a cluster. Oracle Database QoS Management does not manage I/O resources, so I/O intensive applications are not managed effectively by Oracle Database QoS Management. Oracle Database QoS Management also monitors the memory usage of a server, and redirects connections away from that server if memory is over-committed.

Oracle Database QoS Management integrates with the Oracle RAC database through the following technologies to manage resources within a cluster:

Oracle Database QoS Management periodically evaluates the resource wait times for all used resources. If the average response time for the work requests in a Performance Class is greater than the value specified in its Performance Objective, then Oracle Database QoS Management uses the collected metrics to find the bottlenecked resource. If possible, Oracle Database QoS Management provides recommendations for adjusting the size of the server pools or making alterations to the consumer group mappings in the resource plan used by Oracle Database Resource Manager.

You create database services to provide a mechanism for grouping related work requests. An application connects to the cluster databases using database services. A user-initiated query against the database might use a different service than a web-based application. Different services can represent different types of work requests. Each call or request made to the Oracle RAC database is a work request.

You can also use database services to manage and measure database workloads. To manage the resources used by a service, some services may be deployed on several Oracle RAC instances concurrently, whereas others may be deployed on only a single instance to isolate the workload that uses that service.

In an Oracle RAC cluster, Oracle Database QoS Management monitors the server pools and their nodes, on which the database services are offered. Services are created by the database administrator for a database. For a policy-managed database, the service runs on all servers in the specified server pool. If a singleton service is required due to the inability of the application to scale horizontally, then the service can be restricted to run in a server pool that has a minimum and maximum size of one.

To use Oracle Database QoS Management, you must create one or more policy-managed databases that run in server pools. When you first configure Oracle Database QoS Management, a default Performance Class is created for each service that is discovered on the server pools being monitored. The names of these default Performance Classes are service_name_pc. The workload whose resources you want to monitor and manage must use a database service to connect to the database.

See Also:

Oracle Real Application Clusters Administration and Deployment Guide for more information about services

Oracle Database Resource Manager (Resource Manager) is an example of a resource allocation mechanism; Resource Manager can allocate CPU shares among a collection of resource consumer groups based on a resource plan specified by an administrator. A resource plan allocates the percentage of opportunities to run on the CPU.

Oracle Database QoS Management does not adjust existing Resource Manager plans; Oracle Database QoS Management activates a resource plan named APPQOS_PLAN, which is a complex, multilevel resource plan. Oracle Database QoS Management also creates consumer groups that represent Performance Classes and resource plan directives for each consumer group.

When you implement an Oracle Database QoS Management recommendation to promote or demote a consumer group for a Performance Class, Oracle Database QoS Management makes the recommended changes to the mapping of the Performance Class to the CPU shares specified in the APPQOS_PLAN resource plan. By altering the consumer group, the Performance Class that is currently not meeting its Performance Objective is given more access to the CPU resource.

By default, the APPQOS_PLAN is replaced during the Oracle Scheduler maintenance window. Oracle recommends that you use the APPQOS_PLAN as the plan during those daily windows because this resource plan incorporates the consumer groups from the DEFAULT_MAINTENANCE_PLAN plan. You can force the use of APPQOS_PLAN by running the following commands in SQL*Plus:

BEGIN
  DBMS_SCHEDULER.DISABLE(name=>'"SYS"."MONDAY_WINDOW"');
END;
/
BEGIN
  DBMS_SCHEDULER.SET_ATTRIBUTE(name=>'"SYS"."MONDAY_WINDOW"', attribute=>'RESOURCE_PLAN', value=>'APPQOS_PLAN');
END;
/
BEGIN
  DBMS_SCHEDULER.ENABLE(name=>'"SYS"."MONDAY_WINDOW"');
END;
/

Repeat these commands for every weekday, for example, TUESDAY_WINDOW.
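To avoid retyping the three blocks for each window, you could generate the SQL*Plus text with a small script. The following Python sketch is one way to do that. It assumes that the maintenance windows are named after each day of the week in the same pattern as MONDAY_WINDOW and TUESDAY_WINDOW, so adjust the list of days to match the windows defined in your database. The function name is hypothetical, and the script only produces text; it does not connect to the database.

```python
# Assumed window names: <DAY>_WINDOW, following the MONDAY_WINDOW example.
DAYS = ["MONDAY", "TUESDAY", "WEDNESDAY", "THURSDAY", "FRIDAY", "SATURDAY", "SUNDAY"]

# The same three PL/SQL blocks shown for MONDAY_WINDOW, with the window name
# left as a placeholder.
TEMPLATE = """BEGIN
  DBMS_SCHEDULER.DISABLE(name=>'"SYS"."{window}"');
END;
/
BEGIN
  DBMS_SCHEDULER.SET_ATTRIBUTE(name=>'"SYS"."{window}"', attribute=>'RESOURCE_PLAN', value=>'APPQOS_PLAN');
END;
/
BEGIN
  DBMS_SCHEDULER.ENABLE(name=>'"SYS"."{window}"');
END;
/
"""


def window_script(days=DAYS):
    """Build the SQL*Plus text that sets APPQOS_PLAN for each listed window."""
    return "\n".join(TEMPLATE.format(window=f"{day}_WINDOW") for day in days)


script = window_script()
```

You can write the value of script to a file and review it before running it in SQL*Plus.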

See Also:

Oracle Database Administrator's Guide for more information about Resource Manager

Caution:

By default, any named user may create a server pool. To restrict the operating system users that have this privilege, Oracle strongly recommends that you add specific users to the CRS Administrators list. See Oracle Clusterware Administration and Deployment Guide for more information about adding users to the CRS Administrators list.

You must have Oracle Clusterware installed and configured before you can use Oracle Database QoS Management. The CRS Administrator must create server pools to be used by policy-managed Oracle RAC databases.

When you first configure Oracle Database QoS Management and create the initial Policy Set, you specify which server pools should be managed by Oracle Database QoS Management and which should only be monitored. If you select a server pool to be managed by Oracle Database QoS Management, then Oracle Database QoS Management monitors the resources used by all the Performance Classes that run in that server pool. If a Performance Class is not satisfying its Performance Objective, then Oracle Database QoS Management can recommend moving servers between server pools to provide additional resources where needed.

Oracle Database QoS Management uses Oracle Cluster Health Monitor (CHM) to collect memory metric data for the servers in the cluster.

See Also:

Oracle Clusterware Administration and Deployment Guide for more information about policy-managed databases and server pools

Run-time connection load balancing enables Oracle Clients to provide intelligent allocations of connections in the connection pool when applications request a connection to complete some work; the decision of which instance to route a new connection to is based on the current level of performance provided by the database instances.

Applications that use resources managed by Oracle Database QoS Management can also benefit from connection load balancing and transparent application failover (TAF). Connection load balancing enables you to spread user connections across all of the instances that are supporting a service. For each service, you can define the method you want the listener to use for load balancing by setting the connection load balancing goal, using the appropriate SRVCTL command with the -j option. You can also specify a single TAF policy for all users of a service using SRVCTL with the options -m (failover method), -e (failover type), and so on.

See Also:

Oracle Real Application Clusters Administration and Deployment Guide for more information about run-time connection load balancing

Oracle Database Net Services Administrator's Guide for more information about configuring TAF

Enterprise database servers can use all available memory due to too many open sessions or runaway workloads. Running out of memory can result in failed transactions or, in extreme cases, a reboot of the server and the loss of a valuable resource for your applications. Oracle Database QoS Management detects memory pressure on a server in real time and redirects new sessions to other servers to prevent using all available memory on the stressed server.

When Oracle Database QoS Management is enabled and managing an Oracle Clusterware server pool, Cluster Health Monitor sends a metrics stream that provides real-time information about memory resources for the cluster servers to Oracle Database QoS Management. This information includes the following:

Amount of available memory

Amount of memory currently in use

Amount of memory swapped to disk for each server

If Oracle Database QoS Management determines that a node has memory pressure, then the database services managed by Oracle Clusterware are stopped on that node, preventing new connections from being created. After the memory stress is relieved, the services on that node are restarted automatically, and the listener starts sending new connections to that server. The memory pressure can be relieved in several ways (for example, by closing existing sessions or by user intervention).

Rerouting new sessions to different servers protects the existing workloads on the memory-stressed server and enables the server to remain available. Managing the memory pressure for servers adds a new resource protection capability in managing service levels for applications hosted on Oracle RAC databases.

Performance management and managing systems for high availability are closely related. Users typically consider a system to be up, or available, only when its performance is acceptable. You can use Oracle Database QoS Management and Performance Objectives to specify and maintain acceptable performance levels.

Oracle Database QoS Management is a run-time performance management product that optimizes resource allocations to help your system meet service-level agreements under dynamic workload conditions. Oracle Database QoS Management provides recommendations to help the work that is most critical to your business get the necessary resources. Oracle Database QoS Management assists in rebalancing resource allocations based upon current demand and resource availability. Nonessential work is suppressed to ensure that work vital to your business completes successfully.

Oracle Database QoS Management is not a feature to use for improving performance; the goal of Oracle Database QoS Management is to maintain optimal performance levels. Oracle Database QoS Management assumes that system parameters that affect both performance and availability have been set appropriately, and that they are constant. For example, the FAST_START_MTTR_TARGET database parameter controls how frequently the database writes the redo log data to disk. Using a low value for this parameter reduces the amount of time required to recover your database, but the overhead of writing redo log data more frequently can have a negative impact on the performance of your database. Oracle Database QoS Management does not make recommendations regarding the values specified for such parameters.

Management for high availability encompasses many issues that are not related to workload and that cannot be affected by managing workloads. For example, system availability depends crucially on the frequency and duration of software upgrade events. System availability also depends directly on the frequency of hardware failures. Managing workloads cannot change how often software upgrades are done or how often hardware fails.

See Also:

Oracle Real Application Clusters Administration and Deployment Guide for more information about using Oracle RAC for high availability

Oracle Database QoS Management bases its decisions on observations of how long work requests spend waiting for resources. Examples of resources that work requests might wait for include hardware resources, such as CPU cycles, disk I/O queues, and Global Cache blocks. Other waits can occur within the database, such as latches, locks, pins, and so on. Although the resource waits within the database are accounted for in the Oracle Database QoS Management metrics, they are not managed or specified by type.

The response time of a work request consists of execution time and a variety of wait times; changing or improving the execution time generally requires application source code changes. Oracle Database QoS Management therefore observes and manages only wait times.

Oracle Database QoS Management uses a standardized set of metrics, which are collected by all the servers in the system. There are two types of metrics used to measure the response time of work requests: performance metrics and resource metrics. These metrics enable direct observation of the wait time incurred by work requests in each Performance Class, for each resource requested, as the work request traverses the servers, networks, and storage devices that form the system. Another type of metric, the Performance Satisfaction Metric, measures how well the Performance Objectives for a Performance Class are being met.

Performance metrics are collected at the entry point to each server in the system. They give an overview of where time is spent in the system and enable comparisons of wait times across the system. Data is collected periodically and forwarded to a central point for analysis, decision making, and historical storage. See Figure 1-6, "Diagram of Oracle Database QoS Management Server Architecture" for an illustration of how the system data is collected.

Performance metrics measure the response time (the difference between the time a request comes in and the time a response is sent out). The response time for all database client requests in a Performance Class is averaged and presented as the average response time, measured as database requests per second.

See Also:

"Reviewing Performance Metrics"

There are two resource metrics for each resource of interest in the system:

- Resource usage time: measures how much time was spent using the resource for each work request
- Resource wait time: measures the time spent waiting to get the resource

Resources are classified as CPU, Storage I/O, Global Cache, and Other (database waits). The data is collected from the Oracle RAC databases, Oracle Clusterware, and the operating system.
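
One way to picture the two resource metrics is as a pair of accumulators kept for each of the four resource classes. The sketch below is only an illustrative data structure: the `Resource`, `ResourceMetrics`, and `WorkRequestMetrics` names are assumptions and do not correspond to any Oracle interface.

```python
from dataclasses import dataclass, field
from enum import Enum


class Resource(Enum):
    """The four resource classes named in the text."""
    CPU = "CPU"
    STORAGE_IO = "Storage I/O"
    GLOBAL_CACHE = "Global Cache"
    OTHER = "Other"


@dataclass
class ResourceMetrics:
    usage_time: float = 0.0  # seconds spent using the resource
    wait_time: float = 0.0   # seconds spent waiting to get the resource


@dataclass
class WorkRequestMetrics:
    """Usage and wait time per resource for one work request (hypothetical)."""
    by_resource: dict = field(
        default_factory=lambda: {r: ResourceMetrics() for r in Resource}
    )

    def record(self, resource, usage=0.0, wait=0.0):
        m = self.by_resource[resource]
        m.usage_time += usage
        m.wait_time += wait

    def total_usage(self):
        return sum(m.usage_time for m in self.by_resource.values())

    def total_wait(self):
        return sum(m.wait_time for m in self.by_resource.values())


request = WorkRequestMetrics()
request.record(Resource.CPU, usage=0.5, wait=0.25)
request.record(Resource.STORAGE_IO, usage=0.25, wait=0.5)
```

Keeping usage and wait time separate for each resource is what makes it possible to attribute the wait portion of response time to a specific resource.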

A useful metric for analyzing workload performance is a common and consistent numeric measure of how work requests in a Performance Class are doing against the current Performance Objective for that Performance Class. This numeric measure is called the Performance Satisfaction Metric.

Different performance objectives are used to measure the performance of workloads, as shown in the following table:

| Workload Type | Performance Objectives |
|---|---|
| OLTP | Response time, transactions per second |
| Batch | Velocity, throughput |
| DSS | Read or cache hit ratio, duration, throughput |

Oracle Database QoS Management currently supports only OLTP workloads. For OLTP workloads, you can configure only a response time Performance Objective.

The Oracle Database QoS Management metrics provide the information needed to systematically identify Performance Class bottlenecks in the system. When a Performance Class is violating its Performance Objective, the bottleneck for that Performance Class is the resource that contributes the largest average wait time for each work request in that Performance Class.

The Oracle Database QoS Management metrics are used to find a bottleneck for a Performance Class using the following steps:

1. Oracle Database QoS Management selects the highest ranked Performance Class that is not meeting its Performance Objective.
2. For that Performance Class, wait times for each resource are determined from the collected metrics.
3. The resource with the highest wait time per request is determined to be the bottlenecked resource.

Analyzing the average wait for each database request and the total number of requests for each Performance Class provides the resource wait time component of the response times of each Performance Class. The largest such resource contribution (CPU, Storage I/O, Global Cache, or Other) is the current bottleneck for the Performance Class.
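
The bottleneck search described above can be sketched as follows. This is a simplified illustration, not the product's implementation: the `PerformanceClass` type, its field names, the convention that rank 1 is the highest rank, and all sample values are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class PerformanceClass:
    name: str
    rank: int                    # assumption: 1 is the highest rank
    objective_seconds: float     # response time Performance Objective
    avg_response_seconds: float  # measured average response time
    avg_wait_per_request: dict   # resource name -> average wait in seconds

    def is_violating(self):
        return self.avg_response_seconds > self.objective_seconds


def find_bottleneck(classes):
    """Return (class name, bottlenecked resource), or None if all objectives are met."""
    violating = [pc for pc in classes if pc.is_violating()]
    if not violating:
        return None
    # Step 1: highest ranked Performance Class not meeting its objective.
    target = min(violating, key=lambda pc: pc.rank)
    # Steps 2 and 3: the resource with the largest average wait per request.
    waits = target.avg_wait_per_request
    return target.name, max(waits, key=waits.get)


classes = [
    PerformanceClass("checkout", 1, 0.5, 0.4,
                     {"CPU": 0.1, "Storage I/O": 0.1, "Global Cache": 0.05, "Other": 0.05}),
    PerformanceClass("search", 2, 0.5, 0.9,
                     {"CPU": 0.1, "Storage I/O": 0.4, "Global Cache": 0.2, "Other": 0.05}),
    PerformanceClass("reports", 3, 1.0, 2.0,
                     {"CPU": 0.9, "Storage I/O": 0.3, "Global Cache": 0.1, "Other": 0.1}),
]
bottleneck = find_bottleneck(classes)
```

In this example, `checkout` meets its objective, so the search moves to `search`, the next highest ranked class, whose largest wait contribution is Storage I/O.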