Table of Contents

This chapter introduces MySQL InnoDB cluster, the various components of which are available from MySQL Downloads.

This documentation is in preproduction status and is intended for demonstration and preliminary use only. It may not be specific to the hardware on which you are using the software. Oracle Corporation and its affiliates are not responsible for and expressly disclaim all warranties of any kind with respect to this documentation and will not be responsible for any loss, costs, or damages incurred due to the use of this documentation.

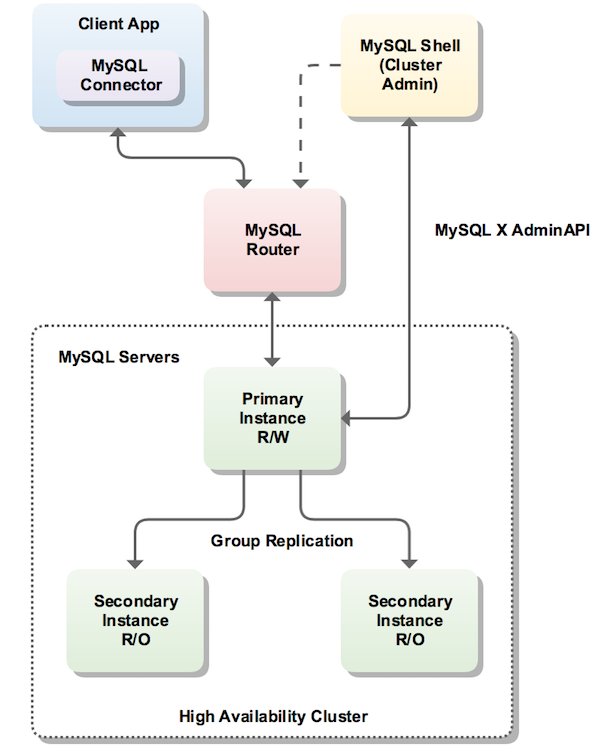

MySQL InnoDB cluster is a collection of products that work together to provide a high availability solution. A group of MySQL servers can be configured to create a cluster using MySQL Shell. The cluster of servers has a single master, called the primary, which acts as the read-write master. Multiple secondary servers are replicas of the master. A minimum of three servers are required to create a high availability cluster. A client application is connected to the primary via MySQL Router. If the primary fails, a secondary is automatically promoted to the role of primary, and MySQL Router routes requests to the new primary.

To implement a high availability solution InnoDB cluster uses the following MySQL technologies:

MySQL Shell 1.0.8 or higher. Includes the AdminAPI, which enables you to create and administer an InnoDB cluster, using either JavaScript or Python scripting. MySQL Shell also requires Python 2.7 and above to run cluster provisioning scripts.

MySQL Router 2.1.2 or higher. Caches the metadata of the InnoDB cluster and performs high availability routing to the MySQL Server instances which make up the cluster. If the primary instance becomes unavailable, MySQL Router automatically routes client requests to a promoted secondary (the new primary).

MySQL Server 5.7.17 or higher. This provides the Group Replication mechanism to allow data to be replicated from the primary to the secondaries in the cluster.

An overview of how these technologies work together is shown in the following diagram:

For more information about the current release versions of MySQL Shell, MySQL Router and MySQL Group Replication see:

For additional information about the AdminAPI available in MySQL Shell, see the JavaScript reference documentation.

AdminAPI is available as of MySQL Shell 1.0.8.

For more background information see Chapter 3, Using MySQL as a Document Store.

Installing MySQL InnoDB cluster means installing its separate components. This means downloading and installing the following:

MySQL Server 5.7.17 or higher. For details, see Chapter 2, Installing and Upgrading MySQL.

MySQL Router 2.1.2 or higher. For details, see Installation.

MySQL Shell 1.0.8 or higher. For details, see Section 3.3.1, “Installing MySQL Shell”.

This section explains how to use MySQL Shell with AdminAPI to set up an InnoDB cluster and configure MySQL Router to achieve high availability.

InnoDB cluster instances are created and managed through the

MySQL Shell. The MySQL Shell offers a specific Administrative

Module for this purpose, called dba, that is

automatically initialized at startup.

To create a new InnoDB cluster, the MySQL Shell must be connected to the MySQL Server instance. By default, this MySQL Server instance is the seed instance of the new InnoDB cluster and hold the initial data set.

This tutorial describes how to create three local sandbox instances, one primary and two secondaries, the minimum required to provide high availability.

MySQL Shell includes the AdminAPI, which provides the

dba global variable and its associated

methods. These dba methods help you to

administer your cluster, for example by using

dba.deploySandboxInstance() to add a sandbox

MySQL instance.

AdminAPI is available as of MySQL Shell 1.0.8.

To list all available dba commands, use the

dba.help() method. You can obtain detailed

information for a specific method using the general format

object.help('methodname'). For example:

mysql-js> dba.help('getCluster')

Retrieves a cluster from the Metadata Store.

SYNTAX

<Dba>.getCluster([name])

WHERE

name: Parameter to specify the name of the cluster to be returned.

DESCRIPTION

If name is not specified, the default cluster will be returned.

If name is specified, and no cluster with the indicated name is found, an error

will be raised.

Initially deploying and using local sandbox instances of MySQL is a good way to start your exploration of InnoDB cluster. You can fully test out InnoDB cluster locally, prior to deployment on your production servers. MySQL Shell has built in functionality for creating sandbox instances. MySQL Shell creates the sandbox instances correctly configured to work with Group Replication in a locally deployed clustered scenario.

Sandbox instance are only suitable for deploying and running on your local machine.

In a production environment the MySQL Server instances would be deployed on various hosts on the network. This is explained later in this guide.

The first step is to create sandbox MySQL Server instances using MySQL Shell.

A minimum of three instances are required to create an InnoDB cluster that is tolerant to the failure of one instance. If two instances leave the group unexpectedly, then the cluster is no longer highly available and capable of supporting writes, and reverts to read-only mode. A cluster of five nodes would be tolerant to the simultaneous failure of two instances. In the general case, the number of simultaneous failures that can be sustained while retaining functioning high availability is (nodes - 1)/2.

The dba module provides several functions for

administration of sandbox instances. For this example setup, you

create three sandbox instances. The AdminAPI provides a

function for that purpose:

dba.deploySandboxInstance().

In the following example, the MySQL Shell opens a Session to a server running on the local host at port 3310.

Start MySQL Shell from a command prompt by issuing the command:

shell> mysqlsh

MySQL Shell provides two scripting languages: JavaScript and Python.

The Python scripting language method naming conforms to the PEP-8 Style Guide for Python Code.

You can also write SQL code in a shell script file.

Throughout this guide you see MySQL Shell used primarily in JavaScript mode. For more information on MySQL Shell see Section 3.8, “MySQL Shell User Guide”

When MySQL Shell starts it is in JavaScript mode by default.

You switch into JavaScript mode, Python mode and SQL mode using

the commands \js, \py, and

\sql.

Ensure you are in JavaScript mode by issuing the

\js command, then execute:

mysql-js> dba.deploySandboxInstance(3310)

Semi-colons are not required at the end of the line in JavaScript mode.

The argument passed to

deploySandboxInstance() is the TCP port

number where the MySQL Server instance will listen for

connections. By default the sandbox is created in a directory

named

$HOME/mysql-sandboxes/

on Unix systems. For Microsoft Windows systems the directory is

port%userprofile%\MySQL\mysql-sandboxes\.

port

The root password for the instance is prompted for.

Each instance has its own password. Defining the same password for all sandboxes in this tutorial makes it easier, but remember to use different passwords for each instance on production systems.

Repeat the above command two more times using different port numbers:

mysql-js>dba.deploySandboxInstance(3320)mysql-js>dba.deploySandboxInstance(3330)

You now have three MySQL server sandbox instances running on ports 3310, 3320 and 3330.

Once a sandbox instance is running, it is possible to change its status at any time using the following:

Stop:

dba.stopSandboxInstance()Start:

dba.startSandboxInstance()Kill:

dba.killSandboxInstance()Kills the MySQL Server instance process on the local host, useful to help simulate an unexpected halt while testing failover.

Delete:

dba.deleteSandboxInstance()Completely removes the sandbox instance from your file system.

The next step is to create the InnoDB cluster while connected to the seed MySQL Server instance. The seed instance is the instance that you are connected to via MySQL Shell and that you want to replicate. In this example, the sandbox instances are blank instances, therefore we can choose any instance.

Connect MySQL Shell to the seed instance, in this case the one at port 3310:

mysql-js> \connect root@localhost:3310

The syntax \connect is a shortcut for the

MySQL Shell connect method shell.connect().

Alternatively use the following command:

mysql-js> shell.connect(root@localhost:3310)

Create the InnoDB cluster:

mysql-js> var cluster = dba.createCluster('test')

The parameter passed to the createCluster()

function is a symbolic name given to this InnoDB cluster. The

resulting InnoDB cluster is assigned to the

cluster variable. This function deploys the

metadata to the selected instance, configures it for Group

Replication and adds the instance as the seed of the new

InnoDB cluster.

After validating that the instance is properly configured, it is added to the InnoDB cluster as the seed instance and the replication subsystem is started.

The provided sandbox instances are pre-configured to work with

Group Replication, but if you use a pre-existing instance, it is

possible that some configuration options might not be set in a

compatible way. The createCluster() command

ensures that the settings are correct and if not, it changes

their values. If a change requires MySQL Server to be restarted,

you are prompted to restart it manually whenever convenient.

In summary, when dba.createCluster() is

executed, the following steps are carried out:

The InnoDB cluster Metadata Schema is created (if it does not already exist) or is updated to the latest version. Schema objects or columns are only added, never removed.

The new InnoDB cluster information, including the specified name and password, is inserted into the InnoDB cluster Metadata.

The seed instance (current session) is added to the InnoDB cluster as first instance of the Default ReplicaSet.

The seed instance information is inserted into the InnoDB cluster Metadata.

Once you have created a cluster you can obtain the cluster instance variable using a command such as:

mysql-js> var cluster = dba.getCluster("devCluster")

You specify the name of the cluster you wish to obtain the instance variable for. If you do not specify the name of the cluster the default cluster is returned.

The next step is to add replicas to the InnoDB cluster. Any transactions that were executed by the seed instance are re-executed by each replica as they are added. To demonstrate this you use the sandbox instances that you created earlier.

The seed instance in this example was recently created, so it is nearly empty and had replication enabled when it was created. Therefore, there is little data that needs to be replicated from the primary to the secondaries. In a production environment, where you have an existing database on the seed instance, you could use a tool such as MySQL Enterprise Backup to ensure that the secondaries have matching data before replication starts. This avoids the possibility of lengthy delays while data replicates from the primary to the secondaries. Once the cluster is formed, writes to the primary result in data being replicated to the secondaries.

Add the second instance to the InnoDB cluster:

mysql-js> cluster.addInstance('root@localhost:3320')

The root user's password is prompted for.

Add the third instance:

mysql-js> cluster.addInstance('root@localhost:3330')

The root user's password is prompted for.

At this point you have created a high availability cluster with three instances: a primary, and two secondaries.

You could have added additional details to the logs when adding an instance to a cluster. Pass in 'verbose' to enable additional logging, so our last example would have looked like this:

mysql-js> cluster.addInstance('root@localhost:3330', {verbose: true})

You can only specify localhost in

addInstance() if the instance is a sandbox

instance. This also applies to the implicit

addInstance() after issuing

createCluster().

You can remove an instance from a cluster at any time should you

wish to do so. This can be done with the

removeInstance() method, as in the following

example:

mysql-js> cluster.removeInstance("192.168.1.1:3306")

With three instances now in our InnoDB cluster sandbox, use

cluster.status() to check its status:

mysql-js> cluster.status()

This retrieves the current InnoDB cluster status and outputs a status report. There are several attributes, including the following:

The instance status is either

ONLINE, OFFLINE,

RECOVERING, UNREACHABLE,

or (MISSING).

ONLINE: The instance is online.OFFLINE: The instance may have lost connection to the other instances.RECOVERING: The instance is receiving updates from the seed instance and should eventually switch toONLINE.UNREACHABLE: The instance has lost communication with the cluster.(MISSING): The state of an instance which belongs to a cluster's metadata, but is not currently active on the corresponding Group Replication group.

The mode indicates either

R/W (read and writable) or

R/O (read only). Only the instance marked

"R/W" can execute transactions that update the database, so it

is the PRIMARY. If that instance becomes unreachable for any

reason (like an unexpected halt), one of the remaining "R/O"

instances automatically takes over its place and becomes the new

"R/W" PRIMARY.

To check the status of the InnoDB cluster at a later time, you

can get a reference to the InnoDB cluster object by connecting

to any of its instances. However, if you want to make changes to

the InnoDB cluster, you must connect to the

PRIMARY. For information about how the

InnoDB cluster is running, use the status()

method:

mysql-js>var cluster = dba.getCluster()mysql-js>cluster.status(){ "clusterName": "test", "defaultReplicaSet": { "status": "Cluster tolerant to up to ONE failure.", "topology": { "localhost:3310": { "address": "localhost:3310", "status": "ONLINE", "role": "HA", "mode": "R/W", "leaves": { "localhost:3320": { "address": "localhost:3320", "status": "ONLINE", "role": "HA", "mode": "R/O", "leaves": {} }, "localhost:3330": { "address": "localhost:3330", "status": "ONLINE", "role": "HA", "mode": "R/O", "leaves": {} } } } } } }

As the above output demonstrates, status information includes the InnoDB cluster name, topology, default ReplicaSet, PRIMARY, and more.

To get information about the structure of the InnoDB cluster

itself, use the cluster.describe() function:

mysql-js> cluster.describe();

{

"clusterName": "test",

"adminType": "local",

"defaultReplicaSet": {

"name": "default",

"instances": [

{

"name": "localhost:3310",

"host": "localhost:3310",

"role": "HA"

},

{

"name": "localhost:3320",

"host": "localhost:3320",

"role": "HA"

},

{

"name": "localhost:3330",

"host": "localhost:3330",

"role": "HA"

}

]

}

}

The output from this function shows the structure of the InnoDB cluster including all of its configuration information, ReplicaSets and Instances.

If an instance leaves the cluster, for example because it lost connection and did not or could not automatically rejoin the cluster, it may be necessary to rejoin it to the cluster at a later stage. Because the Group Replication configuration is not stored in the configuration file, restarting an instance causes it to leave the Replication Group, so it must rejoin to add the instance back into the Default ReplicaSet.

The command to rejoin an instance to a cluster is

cluster.rejoinInstance().

In the case where an instance has been configured using

dba.configureLocalInstance(), its Group

Replication information is persisted to the configuration file,

and will rejoin the cluster automatically. More information on

this can be found in the section

Configuring the Instance.

In order for client applications to handle failover, they need

to be aware of the InnoDB cluster topology. They also need to

know which instance is the PRIMARY. While it

is possible for applications to implement that logic, MySQL Router

can provide this functionality for you.

The recommended deployment of MySQL Router is on the same host as the application. In this tutorial, everything is running on a single host, so you deploy MySQL Router to the same host.

Assuming MySQL Router is already installed, the only required step is to bootstrap it with the location of the metadata server. The following does this, and uses all defaults:

shell> mysqlrouter --bootstrap root@localhost:3310

Please enter MySQL password for root:

MySQL Router needs to create a InnoDB cluster metadata client account.

To allow secure storage of its password, please provide an encryption key.

To generate a random encryption key to be stored in a local obscured file,

and allow the router to start without interaction, press Return to cancel

and use the --master-key-path option to specify a file location.

Please provide an encryption key:

Please confirm encryption key:

Bootstrapping system MySQL Router instance...

MySQL Router has now been configured for the InnoDB cluster 'test'.

The following connection information can be used to connect to the cluster.

Classic MySQL protocol connections to cluster 'test':

- Read/Write Connections: localhost:6446

- Read/Only Connections: localhost:6447

X protocol connections to cluster 'test':

- Read/Write Connections: localhost:64460

- Read/Only Connections: localhost:64470

You are prompted for the instance password and encryption key for MySQL Router to use. This encryption key is used to encrypt the instance password used by MySQL Router to connect to the cluster. The ports you can use to connect to the InnoDB cluster are also displayed.

Currently only Classic Protocol connections are supported between MySQL Router and InnoDB cluster.

MySQL Router connects to the InnoDB cluster, fetches its metadata

and configures itself for use. The generated configuration

creates 2 TCP ports: one for read-write sessions (which redirect

connections to the PRIMARY) and one for

read-only sessions (which redirect connections to one of the

SECONDARY instances).

Once bootstrapped and configured, start MySQL Router (or set up a service for it to start automatically when the system boots):

shell> mysqlrouter &

You can now connect a MySQL client, such as MySQL Shell to one

of the incoming MySQL Router ports and see how the client gets

transparently connected to one of the InnoDB cluster

instances. To see which instance you are actually connected to,

simply query the port status variable.

shell>mysqlsh --uri root@localhost:6442mysql-js>\sqlSwitching to SQL mode... Commands end with ; mysql-sql>select @@port;+--------+ | @@port | +--------+ | 3310 | +--------+ 1 row in set (0.00 sec)

When MySQL Router is bootstrapped it records the bootstrap server

addresses in its configuration. These servers contain metadata

used my MySQL Router in order to route correctly. If additional

nodes are now added to the cluster, MySQL Router uses them. If

however, all of the original metadata servers go offline for

some reason, MySQL Router would no longer be able to route

correctly. Consider the following line in a

mysqlrouter.conf file:

... bootstrap_server_addresses=mysql://192.168.56.101:3310,mysql://192.168.56.101:3320,mysql://192.168.56.101:3330 ...

There are three original metadata servers specified here. Now if two additional servers (call them D and E) were added, you would have a five node cluster, and MySQL Router routes to these additional nodes as required. If the original metadata nodes, A, B and C, now went down over a period of time, you would be left with only nodes D and E running. At this point, nodes D and E are still alive and form a quorum. So it should be possible to route calls to them. However, as all original metadata servers are down (nodes A, B and C), MySQL Router shuts off all routing.

To test if failover works, simulate an unexpected halt by

killing the PRIMARY instance using the

dba.killSandboxInstance() function and check

that one of the other instances takes over automatically.

mysql-js> dba.killSandboxInstance(3310)

Then you can again check which instance you are connected to.

The first SELECT fails as the connection to

the original PRIMARY was lost. MySQL Shell

automatically reconnects for you and when you issue the command

again the new port is confirmed.

mysql-js>\sqlSwitching to SQL mode... Commands end with ; mysql-sql>select @@port;ERROR: 2013 (HY000): Lost connection to MySQL server during query The global session got disconnected. Attempting to reconnect to 'root@localhost:6446'... The global session was successfully reconnected. mysql-sql>select @@port;+--------+ | @@port | +--------+ | 3330 | +--------+ 1 row in set (0.00 sec)

This shows that the InnoDB cluster provided us with automatic

failover, that MySQL Router has automatically reconnected us to the

new PRIMARY instance, and that we have high

availability.

You can bring the instance that you killed back online.

mysql-js>dba.startSandboxInstance(3310)mysql-js>cluster.rejoinInstance('root@localhost:3310')mysql-js>cluster.status()

If you want to remove all information associated with a cluster,

you can use the cluster.dissolve() method.

This removes all metadata and configuration associated with the

cluster. Once you have dissolved the cluster you need to create

it again from scratch, using

dba.createCluster().

After calling cluster.dissolve(), the

cluster object is no longer valid.

You can automate cluster configuration with scripts. For example:

shell> mysqlsh -f setup-innodb-cluster.js

Any command line options specified after the script file name

are passed to the script and not to

MySQL Shell. You can access those options using the

os.argv array in JavaScript, or the

sys.argv array in Python. In both cases,

the first option picked up in the array is the script name.

The contents for an example script file is shown here:

print('MySQL InnoDB cluster sandbox set up\n');

print('==================================\n');

print('Setting up a MySQL InnoDB cluster with 3 MySQL Server sandbox instances.\n');

print('The instances will be installed in ~/mysql-sandboxes.\n');

print('They will run on ports 3310, 3320 and 3330.\n\n');

var dbPass = shell.prompt('Please enter a password for the MySQL root account: ', {type:"password"});

try {

print('\nDeploying the sandbox instances.');

dba.deploySandboxInstance(3310, {password: dbPass});

print('.');

dba.deploySandboxInstance(3320, {password: dbPass});

print('.');

dba.deploySandboxInstance(3330, {password: dbPass});

print('.\nSandbox instances deployed successfully.\n\n');

print('Setting up InnoDB cluster...\n');

shell.connect('root@localhost:3310', dbPass);

var cluster = dba.createCluster("devCluster");

print('Adding instances to the cluster.');

cluster.addInstance({user: "root", host: "localhost", port: 3320, password: dbPass});

print('.');

cluster.addInstance({user: "root", host: "localhost", port: 3330, password: dbPass});

print('.\nInstances successfully added to the cluster.');

print('\nInnoDB cluster deployed successfully.\n');

} catch(e) {

print('\nThe InnoDB cluster could not be created.\n\nError: ' +

+ e.message + '\n');

}

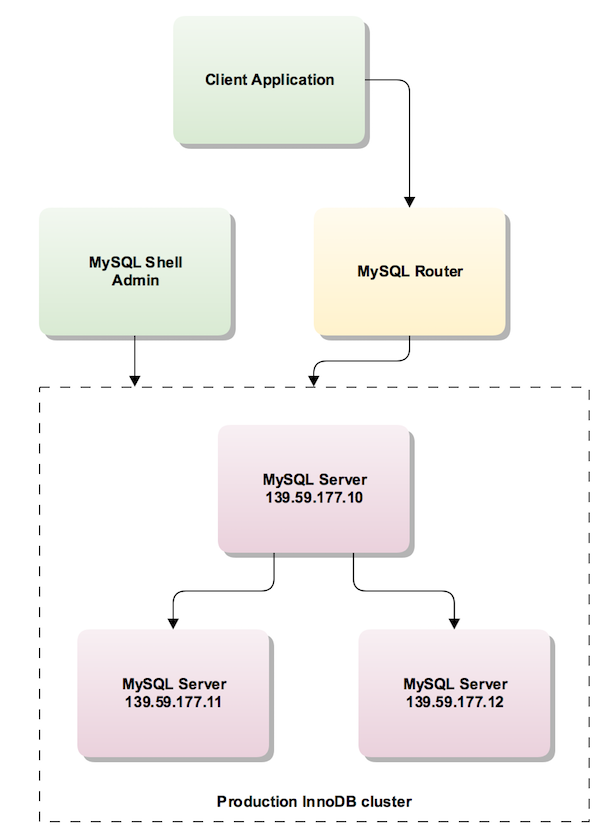

When working in a production environment, the MySQL Server instances are running on hosts as part of a network rather than on your local machine as described in previous sections.

The following diagram illustrates the scenario you work with in the following section:

The user account used to administer an instance does not have to

be the root account, however the user needs to be assigned full

read and write privileges on the Metadata tables in addition to

full MySQL administrator privileges (SUPER,

GRANT OPTION, CREATE,

DROP and so on).

When working with a production deployment it is a good idea to activate verbose logging for MySQL Shell initially. This is helpful in finding and resolving any issues that may arise when you are preparing the server to work as part of InnoDB cluster. To start MySQL Shell with a verbose logging level type:

shell> mysqlsh --log-level=8

The log file is located in

~/.mysqlsh/mysqlsh.log for Unix-based

systems. On Microsoft Windows systems it is located in

%APPDATA%\MySQL\mysqlsh\mysqlsh.log.

The cluster.checkInstanceState() function can

be used for the following purposes:

To validate if an instance can be joined to the cluster.

The clone is consistent with the seed instances and can be recovered to the same state.

Validate if the server instance transactions are compatible with the servers belonging to the Default ReplicaSet.

Before creating a cluster from remote instances you need to

check that the servers are suitably configured. This can be done

using the dba.checkInstanceConfiguration()

function. For detailed help on this function you can type

dba.help('checkInstanceConfiguration').

The dba.checkInstanceConfiguration() function

checks if the server instances are valid for InnoDB cluster

usage.

The following demonstrates this:

mysql-js> dba.checkInstanceConfiguration('user@139.59.177.10:3306')

Please provide the password for 'user@139.59.177.10:3306':

Validating instance...

The instance '139.59.177.10:3306' is not valid for Cluster usage.

The following issues were encountered:

- Some configuration options need to be fixed.

+----------------------------------+---------------+----------------+--------------------------------------------------+

| Variable | Current Value | Required Value | Note |

+----------------------------------+---------------+----------------+--------------------------------------------------+

| binlog_checksum | CRC32 | NONE | Update the server variable or restart the server |

| enforce_gtid_consistency | OFF | ON | Restart the server |

| gtid_mode | OFF | ON | Restart the server |

| log_bin | 0 | 1 | Restart the server |

| log_slave_updates | 0 | ON | Restart the server |

| master_info_repository | FILE | TABLE | Restart the server |

| relay_log_info_repository | FILE | TABLE | Restart the server |

| transaction_write_set_extraction | OFF | XXHASH64 | Restart the server |

+----------------------------------+---------------+----------------+--------------------------------------------------+

Please fix these issues , restart the server and try again.

{

"config_errors": [

{

"action": "server_update",

"current": "CRC32",

"option": "binlog_checksum",

"required": "NONE"

},

{

"action": "restart",

"current": "OFF",

"option": "enforce_gtid_consistency",

"required": "ON"

},

{

"action": "restart",

"current": "OFF",

"option": "gtid_mode",

"required": "ON"

},

{

"action": "restart",

"current": "0",

"option": "log_bin",

"required": "1"

},

{

"action": "restart",

"current": "0",

"option": "log_slave_updates",

"required": "ON"

},

{

"action": "restart",

"current": "FILE",

"option": "master_info_repository",

"required": "TABLE"

},

{

"action": "restart",

"current": "FILE",

"option": "relay_log_info_repository",

"required": "TABLE"

},

{

"action": "restart",

"current": "OFF",

"option": "transaction_write_set_extraction",

"required": "XXHASH64"

}

],

"errors": [],

"restart_required": true,

"status": "error"

}

mysql-js>

The report shows the configuration changes required for that instance before it can be added to the cluster.

Once the configuration issues have been identified you can reconfigure your server instance manually. Alternatively, if you have the ability to run MySQL Shell locally on the remote server, log in locally to the server and run MySQL Shell to configure the server. On the server to be configured run:

shell> mysqlsh --log-level=8

The function you use to configure a server for InnoDB cluster

use is dba.configureLocalInstance(). This

function runs provisioning scripts for you that modify the MySQL

server's configuration file.

The provisioning scripts that MySQL Shell uses to configure

servers for use in InnoDB cluster require access to Python

(2.7 and above). You can perform a quick check that your

system has Python configured correctly by typing $

/usr/bin/env python. If your Python interpreter

starts, no further action is required. If the previous command

fails with 'python' can't be found, you may have to create a

soft link between /usr/bin/python and your

chosen Python binary, for example,

/usr/bin/python2.7. This can be the case on

systems that have both Python 2.7 and Python 3.x installed.

The dba.configureLocalInstance() function can

only configure servers connected to locally. If you try to run

dba.configureLocalInstance() remotely you get

the following error:

mysql-js> dba.configureLocalInstance('user@139.59.177.10:3306')

Dba.configureLocalInstance: This function only works with local instances (RuntimeError)

If MySQL Shell is started locally, then output will be similar to:

mysql-js> dba.configureLocalInstance('root@localhost:3306')

Please provide the password for 'root@localhost:3306':

Please specify the path to the MySQL configuration file: /etc/mysql/mysql.conf.d/mysqld.cnf

Validating instance...

The configuration has been updated but it is required to restart the server.

{

"config_errors": [

{

"action": "restart",

"current": "OFF",

"option": "enforce_gtid_consistency",

"required": "ON"

},

{

"action": "restart",

"current": "OFF",

"option": "gtid_mode",

"required": "ON"

},

{

"action": "restart",

"current": "0",

"option": "log_bin",

"required": "1"

},

{

"action": "restart",

"current": "0",

"option": "log_slave_updates",

"required": "ON"

},

{

"action": "restart",

"current": "FILE",

"option": "master_info_repository",

"required": "TABLE"

},

{

"action": "restart",

"current": "FILE",

"option": "relay_log_info_repository",

"required": "TABLE"

},

{

"action": "restart",

"current": "OFF",

"option": "transaction_write_set_extraction",

"required": "XXHASH64"

}

],

"errors": [],

"restart_required": true,

"status": "error"

}

mysql-js>

As with dba.checkInstanceConfiguration(), the

configuration requirements are identified, but this time the

entered configuration file is modified. For the changes to take

effect you need to restart the MySQL Server. For example:

shell> sudo service mysql restart

If dba.configureLocalInstance() is used on

a node that is already a member of a cluster, then its Group

Replication configuration information is persisted to the

server configuration file and a call to

rejoinInstance() is not required in that

case. When restarted, the instance is automatically join the

cluster. This is illustrated in the following example:

shell.connect({host: 'localhost', port: 3333, user: 'root', password: 'somePwd'});

var cluster = dba.createCluster('devCluster');

// Here, configureLocalInstance makes sure the instance is configured for GR

dba.configureLocalInstance('localhost:3334', {password:'somePwd', mycnfPath:'some path'})

cluster.addInstance('localhost:3334', {password:'somePwd'})

dba.configureLocalInstance('localhost:3335', {password:'somePwd', mycnfPath:'some path'})

cluster.addInstance('localhost:3335', {password:'somePwd'})

// A restart here, would require using rejoin to put the instance back into the cluster

dba.killSandboxInstance(3335);

dba.startSandboxInstance(3335);

cluster.rejoinInstance('localhost:3335', {password:'somePwd'})

// Calling configureLocalInstance again, since the instance is already part of the cluster

// It will persist the GR server variables

dba.configureLocalInstance('localhost:3335', {password:'somePwd', mycnfPath:'some path'})

// On a new restart, the instance automatically joins the Cluster (no need to rejoinInstance)

dba.killSandboxInstance(3335);

dba.startSandboxInstance(3335);

Once the server has restarted, you can use MySQL Shell again to check the configuration:

mysql-js> dba.checkInstanceConfiguration('root@localhost:3306')

Please provide the password for 'root@localhost:3306':

Validating instance...

The instance 'localhost:3306' is valid for Cluster usage

{

"status": "ok"

}

mysql-js>

You can now log in from your remote MySQL Shell instance.

shell> mysqlsh --uri user@139.59.177.10:3306

Creating a Session to 'user@139.59.177.10:3306'

Enter password: *********

Classic Session successfully established. No default schema selected.

Now create the cluster:

mysql-js> var cluster = dba.createCluster('devCluster');

A new InnoDB cluster will be created on instance 'user@139.59.177.10:3306'.

Creating InnoDB cluster 'devCluster' on 'user@139.59.177.10:3306'...

Adding Seed Instance...

Cluster successfully created. Use Cluster.addInstance() to add MySQL instances.

At least 3 instances are needed for the cluster to be able to withstand up to

one server failure.

First, check the instance configuration:

mysql-js> dba.checkInstanceConfiguration('user@139.59.177.10:3306')

Please provide the password for 'user@139.59.177.10:3306':

Validating instance...

The instance '139.59.177.10:3306' is valid for Cluster usage

{

"status": "ok"

}

You can also check the instance state:

mysql-js> cluster.checkInstanceState('user@139.59.177.10:3306')

Please provide the password for 'user@139.59.177.10:3306':

Analyzing the instance replication state...

The instance '139.59.177.10:3306' is valid for the cluster.

The instance is fully recoverable.

{

"reason": "recoverable",

"state": "ok"

}

Check the cluster status:

mysql-js> cluster.status()

{

"clusterName": "devCluster",

"defaultReplicaSet": {

"name": "default",

"status": "Cluster is NOT tolerant to any failures.",

"topology": {}

}

}

You need to add two more instances to the cluster to make it tolerant to a server failure.

Check the configuration of the next instance to add to the cluster:

mysql-js> dba.checkInstanceConfiguration('user@139.59.177.11:3306')

Please provide the password for 'user@139.59.177.10:3306':

Validating instance...

The instance '139.59.177.11:3306' is valid for Cluster usage

{

"status": "ok"

}

The instance can now be added into the cluster:

mysql-js> cluster.addInstance("user@139.59.177.11:3306");

Please provide a password for 'user@139.59.177.11:3306': *****

A new instance will be added to the InnoDB cluster. Depending on the

amount of data on the cluster this might take from a few seconds to

several hours.

Adding instance 139.59.177.11:3306 to the cluster...

The instance '139.59.177.11:3306' was successfully added to the

cluster.

The next instance can now be added into the cluster:

mysql-js> cluster.addInstance("user@139.59.177.12:3306");

Please provide a password for 'user@139.59.177.12:3306': *****

A new instance will be added to the InnoDB cluster. Depending on the

amount of data on the cluster this might take from a few seconds to

several hours.

Adding instance 139.59.177.12:3306 to the cluster...

The instance '139.59.177.12:3306' was successfully added to the

cluster.

Now recheck cluster status.

When using the createCluster(),

addInstance(), and

rejoinInstance() methods you can optionally

specify a list of approved servers that belong to the cluster,

referred to a whitelist. By specifying the whitelist explicitly

in this way you can increase the security of your cluster

because only servers in the whitelist can connect to the

cluster.

By default, if not specified explicitly, the whitelist is

automatically set to the private network addresses that the

server has network interfaces on. To configure the whitelist,

specify the servers to add with the

ipWhitelist option when using the method. For

example:

mysql-js> c.addInstance("root:guidev!@localhost:3320", {ipWhitelist: "10.157.120.0/24, 192.168.1.110"})

This configures the instance to only accept connections from

servers at addresses 10.157.120.0/24 and

192.168.1.110.

Using the ipWhitelist option configures the

group_replication_ip_whitelist

system variable on the instance.

If a node (or nodes) fail, then a cluster can lose its quorum,

which is the ability to vote in a new primary. In this case you

can re-establish quorum using the method

cluster.forceQuorumUsingPartitionOf(), as

shown in the following MySQL Shell example:

// open session to a cluster mysql-js>cluster = dba.getCluster("devCluster")// The cluster lost its quorum and its status shows // "status": "NO_QUORUM" mysql-js>cluster.forceQuorumUsingPartitionOf("localhost:3310")Restoring replicaset 'default' from loss of quorum, by using the partition composed of [localhost:3310] Please provide the password for 'root@localhost:3310': ****** Restoring the InnoDB cluster ... The InnoDB cluster was successfully restored using the partition from the instance 'root@localhost:3310'. WARNING: To avoid a split-brain scenario, ensure that all other members of the replicaset are removed or joined back to the group that was restored.

If your cluster suffers from a complete outage, you can ensure

it is reconfigured correctly using

dba.rebootClusterFromCompleteOutage(). An

example of use is as follows:

mysql-js> shell.connect('root@localhost:3310');

mysql-js> var cluster = dba.rebootClusterFromCompleteOutage();

This ensures the cluster is correctly reconfigured after a complete outage. It picks the instance the MySQL Shell is connected to as the new seed instance and recovers the cluster based on the existing metadata of that instance.

It is also possible to provide the cluster name as an input parameter:

mysql-js>var cluster = dba.createCluster("devCluster")... ... mysql-js>var cluster = dba.rebootClusterFromCompleteOutage("devCluster");

If this process fails, and the cluster metadata has become badly

corrupted, you may need to drop the metadata and create the

cluster again from scratch. You can drop the cluster metadata

using dba.dropMetaDataSchema().

The dba.dropMetaDataSchema() method should

only be used as a last resort, when it is not possible to

restore the cluster. It can not be undone.

If changes to the Group Replication configurations are made

without using MySQL Shell you need to rescan your cluster. For

example, if you create a cluster with three instances, and then

without using MySQL Shell you add a new instance to that Group

Replication group, the AdminAPI is not aware of that

instance. The same would apply if you removed an instance from a

Group Replication group without using MySQL Shell. It is

necessary to rescan the cluster with

cluster.rescan() in such scenarios.

After the command cluster.rescan() has been

run, nodes are identified that are newly discovered instances.

You are prompted to add each of these newly discovered nodes

into your cluster as required, or you can choose to ignore them.

Nodes that no longer belong to the cluster or which are

unavailable are also reported. In this case you are prompted to

remove the node, or you can later attempt to add it back into

the cluster using a command such as

cluster.rejoin('nodex.example.com:3340').

If you have an existing deployment of Group Replication and you

want to manage it using the MySQL Shell, the option

adoptFromGR from the

dba.createCluster() function can be used.

If you have an open session to one of the members of the cluster,

you can use the cluster.rescan() function to

create and populate the cluster metadata. The

rescan() function discovers new instances and

reports instances that have left the cluster. You are prompted to

add nodes into the cluster, or remove nodes from the cluster, as

you require.

shell> mysqlsh --uri root@192.168.0.11:3306

Creating a Session to 'root@192.168.0.11:3306'

Enter password: ****

Classic Session successfully established. No default schema selected.

MySQL Shell JavaScript Code:

mysql-js> var cluster = dba.createCluster('prodCluster', {adoptFromGR: true});

A new InnoDB cluster will be created on instance 'root@192.168.0.11:3306'.

Creating InnoDB cluster 'prodCluster' on 'root@192.168.0.11:3306'...

Adding Seed Instance...

Cluster successfully created. Use cluster.addInstance() to add MySQL instances.

At least 3 instances are needed for the cluster to be able to withstand up to

one server failure.

mysql-js> cluster.describe();

{

"clusterName": "prodCluster",

"adminType": "local",

"defaultReplicaSet": {

"name": "default",

"instances": [

{

"name": "localhost:3306",

"host": "localhost:3306",

"role": "HA"

},

{

"name": "localhost:3307",

"host": "localhost:3307",

"role": "HA"

},

{

"name": "localhost:3308",

"host": "localhost:3308",

"role": "HA"

}

]

}

}